
The next time you visit a cafe to sip coffee and surf on some free Wi-Fi, try an experiment: Log in to some of your usual sites. Then, with a smile, hand the keyboard over to a stranger. Let them use it for 20 minutes. Remember to pick up your laptop before you leave.

While the scenario seems silly and contrived, it essentially happens each time you visit a site that doesn’t bother to encrypt the traffic to your browser — in other words, sites that neglect using HTTPS.

The encryption of HTTPS provides benefits like confidentiality, integrity, and identity. Your information remains confidential from prying eyes because only your browser and the server can decrypt the traffic. Integrity protects the data from being modified without your (or the site’s) knowledge. We’ll address identity in a bit.

There’s an important distinction between tweeting to the world or sharing thoughts on social media and having your browsing activity over unencrypted HTTP. You intentionally share tweets, likes, pics, and thoughts. The lack of encryption means you’re unintentionally exposing the controls necessary to share such things. It’s the difference between someone viewing your profile and taking control of your keyboard to modify that profile.

The Spy Who Sniffed Me

We most often hear about hackers attacking web sites, but it’s just as easy and lucrative to attack your browser. One method is to deliver malware or lull someone into visiting a spoofed site via phishing. Those techniques don’t require targeting a specific victim. They can be launched scattershot from anywhere on the web, regardless of the attacker’s geographic or network relationship to the victim. Another kind of attack, sniffing, requires proximity to the victim but is no less potent or worrisome.

Sniffing attacks watch the traffic to and from a victim’s web browser. (In fact, all of the computer’s traffic may be visible, but we’re only worried about web sites for now.) The only catch is that the attacker needs to be able to see the communication channel. The easiest way for them to do this is to sit next to one of the end points, either the web server or the web browser. Unencrypted wireless networks — think of cafes, libraries, and airports — make it easy to find the browser’s end point because the traffic is visible to anyone who can receive that network’s signal.

Encryption defeats sniffing attacks by concealing the traffic’s meaning from all except those who know the secret to decrypting it. The traffic remains visible to the sniffer, but it appears as streams of random bytes rather than HTML, links, cookies, and passwords. The trick is understanding where to apply encryption in order to protect your data. For example, wireless networks can be encrypted, but the history of wireless security is laden with egregious mistakes. And it’s not necessarily a sufficient solution.

The first wireless encryption scheme was called WEP. It was the security equivalent of Pig Latin. It seems secret at first. Then the novelty wears off once you realize everyone knows what ixnay on the ottenray means, even if they don’t know the movie reference. WEP required a password to join the network, but the protocol’s poor encryption exposed enough hints about the password that someone with a wireless sniffer could trivially reverse engineer it. This was a fatal flaw, because the time required to crack the password was a fraction of that needed to blindly guess the password with a brute force attack – a matter of hours (or less) instead of weeks (or centuries, as it should be).

Security improvements were attempted for Wi-Fi, but many turned out to be failures since they just metaphorically replaced Pig Latin with an obfuscation more along the lines of Klingon or Quenya, depending on your fandom leanings. The challenge was creating an encryption scheme that protected the password well enough that attackers would be forced to fall back to the inefficient brute force attack. The security goal is a Tower of Babel, with languages that only your computer and the wireless access point could understand — and which don’t drop hints for attackers. Protocols like WPA2 accomplish this far better than WEP ever did. WPA3 does even better.

We’ve been paying attention to public spaces, but the problem spans all kinds of networks. Sniffing attacks are just as feasible in corporate environments. They only differ in terms of threat scenarios. Fundamentally, HTTPS is required to protect the data transiting your browser.

S For Secure

Sites that neglect to use HTTPS are subverting the privacy controls you thought were protecting your data.

If my linguistic metaphors have left you with no understanding of the technical steps to execute sniffing attacks, rest assured the tools are simple and readily available. One from 2010 was a Firefox plugin called Firesheep. At the time, it enabled session hijacking against sites like Amazon, Facebook, Twitter, and others. The plugin demonstrated that technical attacks, regardless of sophistication, can be put into the hands of anyone who wishes to be mischievous, unethical, or malicious. Firesheep reduced the need for hacking skills to just being able to use a mouse.

To be clear, sniffing attacks don’t need to grab your password in order to negatively impact you. Login pages must use HTTPS to protect your credentials. If sites then fall back to HTTP (without the S) after you log in, they’re not protecting your privacy or your temporary identity.

We need to take an existential diversion here to distinguish between “you” as the person visiting a site and the “you” that the site knows. Sites speak to browsers. They don’t (yet?) reach beyond the screen to know that you are in fact who you say you are. The credentials you supply for the login page are supposed to prove your identity because you are ostensibly the only one who knows them. Sites need to keep track of who you are and that you’ve presented valid credentials. So, the site sets a cookie in your browser. From then on, that cookie, a handful of bits, is your identity.

These identifying cookies need to be a shared secret – a value known only to your browser and the site. Otherwise, someone else could use that cookie to impersonate you.
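As a rough sketch of what keeping that secret involves on the server side, the snippet below builds a `Set-Cookie` value for a session token. The helper name `session_cookie_header` and the cookie name `sid` are made up for illustration. The `Secure` attribute is the relevant part here: it tells the browser to send the cookie only over HTTPS, so a sniffer on an unencrypted hop never sees it.

```php
<?php
// Hypothetical helper: formats a Set-Cookie value for a session identifier.
function session_cookie_header(string $name, string $token): string
{
    return sprintf(
        // Secure: only sent over HTTPS. HttpOnly: hidden from page scripts.
        '%s=%s; Path=/; Secure; HttpOnly; SameSite=Lax',
        $name,
        rawurlencode($token)
    );
}

// 32 bytes from the system CSPRNG, hex-encoded, so the value can't be guessed.
$token = bin2hex(random_bytes(32));
echo 'Set-Cookie: ', session_cookie_header('sid', $token), "\n";
```

None of these attributes help if the site later serves a page over plain HTTP and issues the cookie without `Secure`; the flag only works when the whole session stays on HTTPS.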

S For Sometimes

Sadly, it seems that money and corporate embarrassment motivate protective measures far more often than privacy concerns do. Many sites have chosen to implement a more rigorous enforcement of HTTPS connections called HTTP Strict Transport Security (HSTS). PayPal, whose users have long been victims of money-draining phishing attacks, was one of the first sites to use HSTS to prevent malicious sites from fooling browsers into switching to HTTP or spoofing pages. Like any good security measure, HSTS is transparent to the user. All you need is a browser that supports it (all do) and a site to require it (many don’t).
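For a site that wants to require it, the mechanics are small. The sketch below is one possible shape, not a recommendation for any particular stack: the function names, the canonical host `example.com`, and the one-year `max-age` are all assumptions. It decides which header a response should carry: a redirect to HTTPS when the request arrived over HTTP, or the `Strict-Transport-Security` header when it arrived over HTTPS (browsers ignore HSTS delivered over plain HTTP).

```php
<?php
// Hypothetical helper: formats the Strict-Transport-Security header value.
function hsts_header_value(int $maxAgeSeconds, bool $includeSubDomains = true): string
{
    $value = 'max-age=' . $maxAgeSeconds;
    if ($includeSubDomains) {
        $value .= '; includeSubDomains';
    }
    return $value;
}

// Hypothetical helper: picks the header line for a request described by a
// $_SERVER-style array. The canonical host is passed in rather than trusted
// from the request's Host header.
function transport_headers(array $server, string $canonicalHost): array
{
    $isHttps = !empty($server['HTTPS']) && $server['HTTPS'] !== 'off';
    if (!$isHttps) {
        $path = $server['REQUEST_URI'] ?? '/';
        return ['Location: https://' . $canonicalHost . $path];
    }
    // 31536000 seconds is one year; the value is an assumption for this sketch.
    return ['Strict-Transport-Security: ' . hsts_header_value(31536000)];
}

// In a real request handler each line would be passed to header().
foreach (transport_headers(['HTTPS' => 'on'], 'example.com') as $line) {
    echo $line, "\n";
}
```

The redirect alone still leaves the very first HTTP request exposed; HSTS is what lets the browser skip that request on later visits.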

Improvements like HSTS should be encouraged. HTTPS is inarguably an important protection. However, the protocol has its share of weaknesses and determined attackers. Plus, HTTPS only protects against certain types of attacks; it has no bearing on cross-site scripting, SQL injection, or a myriad of other vulnerabilities. The security community is neither ignorant of these problems nor lacking in solutions. The lock icon on your browser that indicates a site uses HTTPS may be minuscule, but the protection it affords is significant.

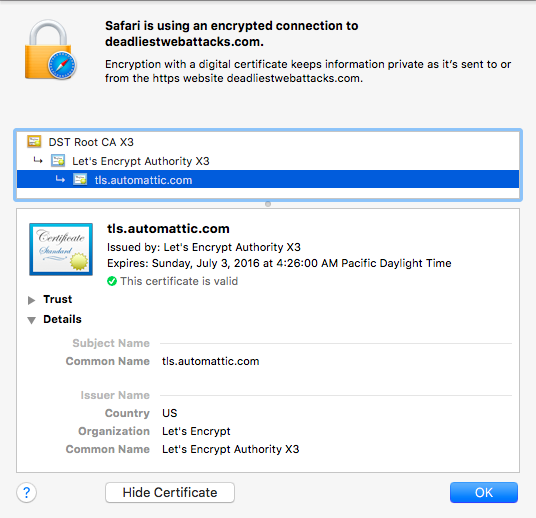

In the 2016 version of this article, the SSL Pulse noted only ~72% of the top 200K sites surveyed supported TLS 1.2, with 29% still supporting the egregiously insecure SSLv3. The Let’s Encrypt project started making TLS certs more attainable in late 2015.

In March 2023, the SSL Pulse has TLS 1.2 at ~100% of sites surveyed, with 2% stubbornly supporting SSLv3. Even better is that the survey sees 61% of sites supporting TLS 1.3. That progress is a successful combination of Let’s Encrypt, browsers dropping support for ancient protocols, and the push by HTTP/2 and HTTP/3 to have always-encrypted channels on modern TLS versions. Additionally, it reports that ~34% of sites support HSTS.

• • •

The alphabetically adjacent domains when this site was hosted at WordPress included air fresheners, web security, and cats. Thanks to Let’s Encrypt, all of those now support HTTPS by default.

Even better, WordPress serves the Strict-Transport-Security header to ensure browsers adhere to HTTPS when visiting it. So, whether you’re being entertained by odors, HTML injection, or felines, your browser is encrypting traffic.

Let’s Encrypt makes this possible for two reasons. The project provides free certificates, which addresses the economic aspect of obtaining and managing them. Users who blog, create content, or set up their own web sites can do so with free tools. But the HTTPS certificates were never free and there was little incentive for them to spend money. To further compound the issue, users creating content and web sites rarely needed to know the technical underpinnings of how those sites were set up (which is perfectly fine!). Yet the secure handling and deployment of certificates requires more technical knowledge.

Most importantly, Let’s Encrypt addressed this latter challenge by establishing a simple, secure ACME protocol for the acquisition, maintenance, and renewal of certificates. Even when (or perhaps especially when) certificates have lifetimes of one or two years, site administrators would forget to renew them. It’s this level of automation that makes the project successful.

Hence, WordPress can now afford – both in the economic and technical sense – to deploy certificates for all the custom domain names it hosts. That’s what brings us to the cert for this site, which is but one domain in a list of SAN entries from deadairfresheners to a Russian-language blog about, inevitably, cats.

Yet not everyone has taken advantage of the new ease of encrypting everything. Five years ago I wrote about Why You Should Always Use HTTPS. Sadly, the article itself is served only via HTTP. You can request it via HTTPS, but the server returns a hostname mismatch error for the certificate, which breaks the intent of using a certificate to establish a server’s identity.
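A hostname mismatch means none of the names in the certificate cover the name the browser asked for. The following is a simplified sketch of that comparison, assuming the SAN entries have already been extracted into an array; the function name is hypothetical, and real clients leave this check to their TLS library rather than reimplementing it.

```php
<?php
// Hypothetical helper: does any SAN name cover the requested hostname?
function hostname_matches_cert(string $hostname, array $sanNames): bool
{
    $hostname = strtolower($hostname);
    foreach ($sanNames as $name) {
        $name = strtolower($name);
        if ($name === $hostname) {
            return true;
        }
        // A wildcard stands in for exactly one left-most label, so
        // *.example.com covers www.example.com but not example.com
        // or a.b.example.com.
        if (strncmp($name, '*.', 2) === 0) {
            $suffix = substr($name, 1); // ".example.com"
            $dot = strpos($hostname, '.');
            if ($dot !== false && $dot > 0 && substr($hostname, $dot) === $suffix) {
                return true;
            }
        }
    }
    return false;
}

var_dump(hostname_matches_cert('www.example.com', ['*.example.com']));
var_dump(hostname_matches_cert('blog.example.org', ['*.example.com']));
```

When the check fails, the encryption may be perfectly sound, but the browser has no evidence about who is on the other end of it.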

As with all things new, free, and automated, there will be abuse. For one, malware authors, phishers, and the like will continue to move towards HTTPS connections. The key point there being “continue to”. Such bad actors already have access to certs and to compromised financial accounts with which to buy them. There’s little in Let’s Encrypt that aggravates this.

Attackers may start looking for letsencrypt clients in order to obtain certs by fraudulently requesting new ones. For example, by provisioning a resource under a well-known URI for the domain (this, and provisioning DNS records, are two ways of establishing trust to the Let’s Encrypt CA).
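To make the well-known URI concrete: in ACME's HTTP-based challenge the CA fetches a file under `/.well-known/acme-challenge/` whose body ties a CA-issued token to the requesting account's key. The sketch below shows only that mapping. The function name and the sample token and thumbprint are invented, and computing the account key thumbprint is left out entirely.

```php
<?php
// Hypothetical helper: where the challenge response lives and what it contains.
function http01_challenge(string $token, string $accountThumbprint): array
{
    // Tokens are base64url text; refuse anything that could alter the path.
    if (!preg_match('/\A[A-Za-z0-9_-]+\z/', $token)) {
        throw new InvalidArgumentException('unexpected characters in token');
    }
    return [
        'path' => '/.well-known/acme-challenge/' . $token,
        'body' => $token . '.' . $accountThumbprint,
    ];
}

// Both values are made-up placeholders.
$challenge = http01_challenge('sampleToken_123', 'sampleThumbprint-abc');
echo $challenge['path'], "\n", $challenge['body'], "\n";
```

Anyone who can write a file at that path on a domain, whether through an upload flaw or a misconfigured client, can pass the challenge for that domain, which is why the directory deserves the same care as any other credential store.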

Attackers may start accelerating domain enumeration via Let’s Encrypt SANs. Again, it’s trivial to walk through domains for any SAN certificate purchased today. This may only be a nuisance for hosting sites or aggregators who are jumbling multiple domains into a single cert.
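Walking those domains takes very little code. The sketch below assumes the SAN extension is available as a comma-separated string of `DNS:` entries, which is how I understand `openssl_x509_parse()` to expose it under `extensions.subjectAltName`; treat that format as an assumption. The function name and the sample domains are placeholders.

```php
<?php
// Hypothetical helper: pulls the DNS names out of a subjectAltName string.
function san_dns_names(string $subjectAltName): array
{
    $names = [];
    foreach (explode(',', $subjectAltName) as $entry) {
        $entry = trim($entry);
        // Keep only DNS entries; skip IP addresses, email addresses, etc.
        if (strncasecmp($entry, 'DNS:', 4) === 0) {
            $names[] = strtolower(substr($entry, 4));
        }
    }
    return $names;
}

// Placeholder value standing in for a shared hosting certificate.
$san = 'DNS:deadairfresheners.example, DNS:cats.example, IP Address:192.0.2.1';
print_r(san_dns_names($san));
```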

Such attacks aren’t proposed as creaky boards on the Let’s Encrypt stage. They’re merely reminders that we should always be reconsidering how old threats and techniques apply to new technologies and processes. For many “astounding” hacks of today, there are likely close parallels to old Phrack articles or basic security principles awaiting clever reinterpretation for our modern times.

Finally, I must leave you with some sort of pop culture reference, or else this post wouldn’t befit the site. This is the 400th anniversary of Shakespeare’s death. So I shall leave you with a quote from Julius Caesar:

Nay, an I tell you that, I’ll ne’er look you i’ the face again: but those that understood him smiled at one another and shook their heads; but, for mine own part, it was Greek to me. I could tell you more news too: Marullus and Flavius, for pulling scarfs off Caesar’s images, are put to silence. Fare you well. There was more foolery yet, if I could remember it.

May it take us far less time to finally bury HTTP and praise the ubiquity of HTTPS. We’ve had enough foolery of unencrypted traffic.

• • •

Developers who wish to defend their code should be aware of Advanced Persistent Exploitability (APE). It’s a situation where broken code remains broken due to incomplete security improvements.

Code has errors. Writing has errors. Consider the pervasiveness of spellcheckers and how often the red squiggle complains about a misspelling in as common an activity as composing email.

Mistakes happen. They’re a natural consequence of writing, whether code, blog, email, or book. The danger here is that mistakes in code lead to vulns that can be exploited.

Sometimes coding errors arise from a stubborn refusal to acknowledge fundamental principles, as seen in the Advanced Persistent Ignorance that lets SQL injection persist more than a decade after programming languages first provided countermeasures. Anyone with sqlmap can trivially exploit that ancient vuln by now without bothering to know how they’re doing so.

Other coding errors stem from misdiagnosing a vuln’s fundamental cause – the fix addresses an exploit example as opposed to addressing the underlying issue. This failure becomes more stark when the attacker just tweaks an exploit payload in order to compromise the vuln again.

We’ll use the following PHP snippet as an example. It has an obvious flaw in the `arg` parameter:

```php
<?php
$arg = $_GET['arg'];
$r = exec('/bin/ls ' . $arg);
?>
```

Confronted with an exploit that contains a semi-colon to execute an arbitrary command, a dev might choose to apply input validation. Doing so isn’t necessarily wrong, but it’s potentially incomplete.

Unfortunately, it may also be a first step on the dangerous path of the “Clever Factor”. In the following example, the dev intended to allow only values that contained alpha characters.

```php
<?php
$arg = $_GET['arg'];
# did one better than escapeshellarg
if(preg_match('/[a-zA-Z]+/', $arg)) {
    $r = exec('/bin/ls ' . $arg);
}
?>
```

As a first offense, the regex should have been anchored to match the complete input string, such as `/^[a-zA-Z]+$/` or `/\A[a-zA-Z]+\z/`. That mistake alone should worry us about the dev’s understanding of the problem. But let’s continue the exercise with three questions:

- Is the intention clear?

- Is it resilient?

- Is it maintainable?
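Before working through those questions, it helps to see how little the unanchored regex checks. This sketch runs the patterns against a sample payload of my own choosing. It also shows a subtlety of PCRE anchors: without the `D` modifier, `$` still matches just before a trailing newline, whereas `\z` only matches at the very end of the string.

```php
<?php
$payload = 'x; cat /etc/passwd';

// Unanchored: succeeds because the single letter "x" satisfies the pattern.
$unanchored = preg_match('/[a-zA-Z]+/', $payload);

// Anchored at both ends: the whole string must be letters, so this fails.
$anchored = preg_match('/\A[a-zA-Z]+\z/', $payload);

// "$" tolerates one trailing newline; "\z" does not.
$dollar = preg_match('/^[a-zA-Z]+$/', "etc\n");
$strict = preg_match('/\A[a-zA-Z]+\z/', "etc\n");

var_dump($unanchored, $anchored, $dollar, $strict);
```

The unanchored check returns 1 for the payload, so the semi-colon and everything after it reach `exec()` untouched.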

This developer declared they “did one better” than the documented solution by restricting input to mixed-case letters. One possible interpretation is that they only expected directories with mixed-case alpha names. A subsequent dev may point out the need to review directories that include numbers or a dot (`.`) and, as a consequence, relax the regex. That change might still be in the spirit of the validation approach (after all, it’s restricting input to expectations), but if the regex changes to where it allows a space or shell metacharacters, then it’ll be exploited. Again.

This leads to resilience against code churn. The initial code might be clear to someone who understands the regex to be an input filter (albeit an incorrect one in its first incarnation). But the regex’s security requirements are ambiguous enough that someone else may mistakenly change it to allow metacharacters or introduce a typo that weakens it.

Additionally, what kind of unit tests accompanied the original version? Merely some strings of known directories and a few negative tests with `./` and `..` in the path? None of those tests would have demonstrated the vulnerability or conveyed the intended security aspect of the regex.

Code must be maintained over time. In the PHP example, the point of validation is right next to the point of usage (the source and sink in SAST terms). Think of this as the spatial version of the time of check to time of use flaw.

In more complex code, especially long-lived code and projects with multiple committers, the validation check could easily drift further and further from the location where its argument is used. This dilutes the original dev’s intention since someone else may not realize the validation context and re-taint or otherwise misuse the parameter – such as using string concatenation with other input values.

In this scenario, a secure solution isn’t even difficult. PHP’s documentation gives clear, prominent warnings about how to secure calls to the entire family of exec-style commands.

```php
$r = exec('/bin/ls ' . escapeshellarg($arg));
```

The recommended solution has favorable attributes:

- Clear intent – It escapes shell arguments passed to a command.

- Resilient – The PHP function will handle all shell metacharacters, not to mention character encodings like UTF-8.

- Easy to maintain – Whatever manipulation the `$arg` parameter suffers throughout the code, it will be properly secured at its point of usage.

It also requires less typing than the back-and-forth of multiple bug comments required to explain the pitfalls of regexes and the necessity of robust defenses. Securing code against one exploit is not the same as securing it against an entire vuln class.
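To see the class-wide effect, here is a small sketch that keeps the escaping at the sink and feeds it a few hostile inputs. The wrapper name `ls_command` and the sample inputs are my own, and the quoting described is what I'd expect on a Unix-like system; Windows builds of PHP quote differently.

```php
<?php
// Hypothetical wrapper: the escaping lives next to the command it protects,
// so it can't drift away from the point of use.
function ls_command(string $dir): string
{
    return '/bin/ls ' . escapeshellarg($dir);
}

// Inputs a security-minded unit test might include alongside the happy path.
$inputs = ['docs', 'x; id', '$(id)', 'a b', "it's"];
foreach ($inputs as $input) {
    // Each value arrives as one single-quoted argument, e.g. /bin/ls 'x; id'
    echo ls_command($input), "\n";
}
```

Tests built from inputs like these document the security intent in a way that a handful of known directory names never could.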

There are many examples of this “cleverness” phenomenon, from string-matching `alert` to dodge XSS payloads to renaming files to avoid exploitation.

What does the future hold for programmers?

Pierre Boulle’s vacationing astronauts perhaps summarized it best in the closing chapter of La Planète des Singes:

Des hommes raisonnables ? … Non, ce n’est pas possible

May your interplanetary voyages lead to less strange worlds.

• • •