
Developers who wish to defend their code should be aware of Advanced Persistent Exploitability (APE). It’s a situation where broken code remains broken due to incomplete security improvements.

Code has errors. Writing has errors. Consider the pervasiveness of spellcheckers and how often the red squiggle complains about a misspelling in as common an activity as composing email.

Mistakes happen. They’re a natural consequence of writing, whether code, blog, email, or book. The danger here is that mistakes in code lead to vulns that can be exploited.

Sometimes coding errors arise from a stubborn refusal to acknowledge fundamental principles, as seen in the Advanced Persistent Ignorance that lets SQL injection persist more than a decade after programming languages first provided countermeasures. Anyone with sqlmap can trivially exploit that ancient vuln by now without bothering to know how they’re doing so.

Other coding errors stem from misdiagnosing a vuln’s fundamental cause – the fix addresses an exploit example as opposed to addressing the underlying issue. This failure becomes more stark when the attacker just tweaks an exploit payload in order to compromise the vuln again.

We’ll use the following PHP snippet as an example. It has an obvious flaw in the `arg` parameter:

    <?php
    $arg = $_GET['arg'];
    $r = exec('/bin/ls ' . $arg);
    ?>

Confronted with an exploit that contains a semicolon to execute an arbitrary command, a dev might choose to apply input validation. Doing so isn’t necessarily wrong, but it’s potentially incomplete.
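To make that exploit concrete, here is a minimal sketch of how plain concatenation lets a semicolon smuggle in a second command. The helper name `build_ls_command` and the payload are illustrative assumptions, and the sketch only builds the command string without executing it.

```php
<?php
// Hypothetical helper that mirrors the vulnerable concatenation.
// It returns the command string only; nothing is executed here.
function build_ls_command(string $arg): string
{
    return '/bin/ls ' . $arg;
}

// An expected value and an illustrative attack payload.
$benign  = build_ls_command('/tmp');
$exploit = build_ls_command('/tmp; id');

echo $benign, PHP_EOL;   // /bin/ls /tmp
echo $exploit, PHP_EOL;  // /bin/ls /tmp; id  (a shell would run `id` as a second command)
```

The shell sees two commands in the second string because the semicolon is a command separator, and nothing in the code marks where the dev's command ends and the user's data begins.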

Unfortunately, input validation may also be a first step on the dangerous path of the “Clever Factor”. In the following example, the dev intended to allow only values made up entirely of alpha characters.

    <?php
    $arg = $_GET['arg'];
    # did one better than escapeshellarg
    if(preg_match('/[a-zA-Z]+/', $arg)) {
        $r = exec('/bin/ls ' . $arg);
    }
    ?>

As a first offense, the regex should have been anchored to match the complete input string, such as `/^[a-zA-Z]+$/` or `/\A[a-zA-Z]+\z/`. (In PCRE, `$` and `\Z` also match just before a trailing newline, so `\z` is the stricter anchor.) That mistake alone should worry us about the dev’s understanding of the problem. But let’s continue the exercise with three questions:

- Is the intention clear?

- Is it resilient?

- Is it maintainable?

This developer declared they “did one better” than the documented solution by restricting input to mixed-case letters. One possible interpretation is that they only expected directories with mixed-case alpha names. A subsequent dev may point out the need to review directories that include numbers or a dot (`.`) and, as a consequence, relax the regex. That change might still be in the spirit of the validation approach (after all, it’s restricting input to expectations), but if the regex changes to where it allows a space or shell metacharacters, then it’ll be exploited. Again.

This leads to resilience against code churn. The initial code might be clear to someone who understands the regex to be an input filter (albeit an incorrect one in its first incarnation). But the regex’s security requirements are ambiguous enough that someone else may mistakenly change it to allow metacharacters or introduce a typo that weakens it.
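The anchoring mistake and the risk of a later relaxation can both be seen in a small sketch. The payloads and the "relaxed" pattern are hypothetical examples of what a subsequent dev might write, not code from any real project.

```php
<?php
// Illustrative payload: a plausible name followed by an injected command.
$payload = 'docs; id';

// Original, unanchored pattern: matches because "docs" appears somewhere.
$unanchored = preg_match('/[a-zA-Z]+/', $payload);

// Anchored pattern: the entire string must consist of letters.
$anchored = preg_match('/\A[a-zA-Z]+\z/', $payload);

// Hypothetical later relaxation to permit digits, dots, and spaces.
// It is anchored, yet a space is enough to add a second argument.
$relaxed = preg_match('/\A[a-zA-Z0-9. ]+\z/', 'docs ..');

var_dump($unanchored === 1); // bool(true): the payload passes validation
var_dump($anchored === 0);   // bool(true): the payload is rejected
var_dump($relaxed === 1);    // bool(true): an extra argument slips through
```

Nothing in the relaxed pattern looks obviously dangerous in a code review, which is the point: the security property lives in the reader's head rather than in the code.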

Additionally, what kind of unit tests accompanied the original version? Merely some strings of known directories and a few negative tests with `./` and `..` in the path? None of those tests would have demonstrated the vulnerability or conveyed the intended security aspect of the regex.

Code must be maintained over time. In the PHP example, the point of validation is right next to the point of usage (the source and sink in SAST terms). Think of this as the spatial version of the time-of-check to time-of-use flaw.

In more complex code, especially long-lived code and projects with multiple committers, the validation check could easily drift further and further from the location where its argument is used. This dilutes the original dev’s intention since someone else may not realize the validation context and re-taint or otherwise misuse the parameter – such as using string concatenation with other input values.
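A minimal sketch of that drift, assuming two hypothetical functions written by different committers: the validator checks one value, while the sink later concatenates it with a second value that was never checked. All names and the payload are illustrative, and the command is only built, never executed.

```php
<?php
// Hypothetical validator, defined far from where the value is used.
function is_alpha_name(string $arg): bool
{
    return preg_match('/\A[a-zA-Z]+\z/', $arg) === 1;
}

// Hypothetical sink added later by another committer. It trusts $arg
// because "it was validated", then concatenates a second, unchecked value.
function build_listing_command(string $arg, string $options): string
{
    return '/bin/ls ' . $options . ' ' . $arg;
}

$arg     = 'docs';      // passes the validator
$options = '-l; id #';  // never validated (illustrative payload)

if (is_alpha_name($arg)) {
    // The check on $arg succeeded, yet the command is tainted again.
    echo build_listing_command($arg, $options), PHP_EOL;
    // /bin/ls -l; id # docs
}
```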

In this scenario, a secure solution isn’t even difficult. PHP’s documentation gives clear, prominent warnings about how to secure calls to the entire family of exec-style commands.

    $r = exec('/bin/ls ' . escapeshellarg($arg));

The recommended solution has favorable attributes:

- Clear intent – It escapes shell arguments passed to a command.

- Resilient – The PHP function will handle all shell metacharacters, not to mention character encodings like UTF-8.

- Easy to maintain – Whatever manipulation the `$arg` parameter suffers throughout the code, it will be properly secured at its point of usage.

It also requires less typing than the back-and-forth of multiple bug comments required to explain the pitfalls of regexes and the necessity of robust defenses. Securing code against one exploit is not the same as securing it against an entire vuln class.
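For illustration, here is a minimal sketch of what `escapeshellarg` produces, assuming a Unix-like platform (its quoting differs on Windows). The helper name `build_safe_ls_command` is hypothetical, and the sketch only builds the string rather than running it.

```php
<?php
// Hypothetical helper: escape at the point of use, immediately before the
// value reaches the shell. Only the string is built; nothing is executed.
function build_safe_ls_command(string $arg): string
{
    return '/bin/ls ' . escapeshellarg($arg);
}

// On Unix-like systems escapeshellarg() single-quotes the argument, so shell
// metacharacters reach ls as literal text.
echo build_safe_ls_command('/tmp; id'), PHP_EOL;  // /bin/ls '/tmp; id'

// An embedded single quote is closed, escaped, and reopened.
echo build_safe_ls_command("it's"), PHP_EOL;      // /bin/ls 'it'\''s'
```

Escaping keeps the value a single argument; it does not decide whether that argument is one the application should accept. A value beginning with `-` would still be read by `ls` as an option, for example, so validation keeps a role alongside the escaping rather than instead of it.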

There are many examples of this “cleverness” phenomenon, from string-matching `alert` to dodge XSS payloads to renaming files to avoid exploitation.

What does the future hold for programmers?

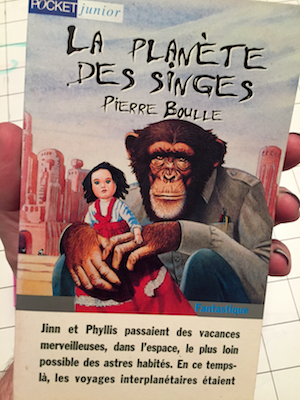

Pierre Boulle’s vacationing astronauts perhaps summarized it best in the closing chapter of La Planète des Singes:

Des hommes raisonnables ? … Non, ce n’est pas possible (“Rational men? … No, that is not possible”)

May your interplanetary voyages lead to less strange worlds.

• • •

Just as there can be appsec truths, there can be appsec laws.

Science fiction author Arthur C. Clarke succinctly described the wondrous nature of technology in what has come to be known as Clarke’s Third Law (from a letter published in Science in January 1968):

Any sufficiently advanced technology is indistinguishable from magic.

The sentiment of that law can be found in an earlier short story by Leigh Brackett, “The Sorcerer of Rhiannon,” published in Astounding Science-Fiction Magazine in February 1942:

Witchcraft to the ignorant… Simple science to the learned.

With those formulations as our departure point, we can now turn towards crypto, browser technologies, and privacy.

The Latinate Lex Cryptobellum:

Any sufficiently advanced cryptographic escrow system is indistinguishable from ROT13.

Or in Leigh Brackett’s formulation:

Cryptographic escrow to the ignorant . . . Simple plaintext to the learned.

A few Laws of Browser Plugins:

Any sufficiently patched Flash is indistinguishable from a critical update.

Any sufficiently patched Java is indistinguishable from Flash.

A few Laws of Browsers:

Any insufficiently patched browser is indistinguishable from malware.

Any sufficiently patched browser remains distinguishable from a privacy-enhancing one.

For what are browsers but thralls to Laws of Ads:

Any sufficiently targeted ad is indistinguishable from chance.

Any sufficiently distinguishable person’s browser has tracking cookies.

Any insufficiently distinguishable person has privacy.

Writing against deadlines:

Any sufficiently delivered manuscript is indistinguishable from overdue.

Which leads us to the foundational Zeroth Law of Content:

Any sufficiently popular post is indistinguishable from truth.

• • •

Here’s an HTML injection (aka cross-site scripting) example that’s due to a series of tragic assumptions that conspire to not only leave the site vulnerable, but waste lines of code doing so.

The first clue to the flaw lies in the querystring’s `state` parameter. The site renders the `state` value into a `title` element. Naturally, a first test payload for HTML injection would be attempting to terminate that element. If that works, then a more impactful followup would be to append arbitrary markup such as `<script>` tags. A simple probe looks like this:

    https://web.site/cg/aLink.do?state=abc%3C/title%3E

The site responds by stripping the payload’s `</title>` tag and all subsequent characters. Only the text leading up to the injected tag is rendered within the `title`.

    <HTML> <HEAD> <TITLE>abc</TITLE>

This seems to have effectively countered the attack. Of course, if you’ve been reading this blog for a while, you’ll suspect this initial countermeasure won’t hold up – that which seems secure shatters under scrutiny.

The developers worried that an attacker might try to inject a closing `</title>` tag. Consequently, they created a filter to watch for such payloads and strip them. This could be implemented as a basic case-insensitive string comparison or a trivial regex.

And it could be bypassed by just a few characters.
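The site’s actual filter isn’t visible from the outside, so the following is only a guess at its shape: a hypothetical PHP function, `strip_closing_title`, that removes a literal closing tag (in any letter case) and everything after it. It reproduces the behavior observed with the simple probe.

```php
<?php
// Hypothetical reconstruction of the filter (an assumption, not the site's
// real code): drop a literal closing title tag and all text that follows.
function strip_closing_title(string $state): string
{
    return preg_replace('/<\/title>.*/is', '', $state);
}

// The simple probe is neutralized, matching the observed response.
echo strip_closing_title('abc</title>'), PHP_EOL;          // abc

// Changing the letter case does not help the attacker either.
echo strip_closing_title('abc</TiTlE><script>'), PHP_EOL;  // abc
```

A filter like this passes every test that uses a well-formed closing tag, which is exactly why it looks finished.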

Consider the following closing tags. Regardless of whether they seem surprising or silly, the extraneous characters are meaningless to HTML yet meaningful to our exploit because they belie the assumption that regexes make good parsers.

    <%00/title>
    <""/title>
    </title"">
    </title id="">

After inspecting how the site responds to each of the above payloads, it’s apparent that the filter only expected a so-called “good” `</title>` tag. Browsers don’t care about an attribute on the closing tag. They’ll ignore such characters as long as they don’t violate parsing rules.

Next, we combine the filter bypass with a payload. In this case, we’ll use an image `onerror` event.

    https://web.site/cg/aLink.do?state=abc%3C/title%20id=%22a%22%3E%3Cimg%20src=x%20onerror=alert%289%29%3E

The attack works! We should have been less sloppy and added an opening `<TITLE>` tag to match the newly orphaned closing one. A nice exploit won’t leave the page messier than it was before.

    <HTML> <HEAD> <TITLE>abc</title id="a"> <img src=x onerror=alert(9)> Vulnerable & Exploited Information Resource Center</TITLE>

The tragedy of this flaw is that it shows how the site’s developers were aware of the concept of HTML injection exploits, but failed to grasp the underlying principles of the vuln. The effort spent blocking an attack (i.e. countering an injected closing tag) not only wasted lines of code on an incorrect fix, but instilled a false sense of security. The code became more complex and less secure.

The mistake also highlights the danger of assuming that well-formed markup is the only kind of markup. Browsers are capricious beasts. They must dance around typos, stomp upon (or skirt around) errors, and walk bravely amongst bizarrely nested tags. This syntactic havoc is why regexes are notoriously worse at dealing with HTML than proper parsers.

There’s an ancillary lesson here in terms of automated testing (or quality manual pen testing, for that matter). A scan of the site might easily miss the vuln if it uses a payload that the filter blocks, or doesn’t apply any attack variants. This is one way sites “become” vulnerable when code doesn’t change, but attacks do.
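As a rough sketch of what applying attack variants could look like, the following runs the closing-tag variants listed earlier against a stand-in filter and counts how many pass through untouched. The filter is a hypothetical regex (the site’s real code is unknown), and `%00` is assumed to denote a URL-encoded NUL byte.

```php
<?php
// Hypothetical stand-in for the site's filter (an assumption).
function naive_title_filter(string $value): string
{
    return preg_replace('/<\/title>.*/is', '', $value);
}

// The well-formed tag plus the closing-tag variants from the article.
$variants = ['</title>', '<%00/title>', '<""/title>', '</title"">', '</title id="">'];

// Collect the variants that the filter leaves unchanged.
$survivors = [];
foreach ($variants as $variant) {
    $decoded = rawurldecode($variant);   // %00 becomes a NUL byte
    if (naive_title_filter($decoded) === $decoded) {
        $survivors[] = $variant;
    }
}

echo count($survivors), ' of ', count($variants), ' variants survive', PHP_EOL;
// 4 of 5 variants survive
```

A scan that only sends the first entry would report the filter as effective. Whether each surviving variant actually closes the element is then up to the browser’s parser, not the filter.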

And it’s one way developers must change their attitudes from trying to outsmart attackers to focusing on basic security principles.
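One such principle is to encode output for the context it lands in rather than hunting for dangerous tags. Here is a minimal sketch, assuming the page were generated by PHP and that a hypothetical helper `render_title` writes the `state` value into the `title` element:

```php
<?php
// Hypothetical rendering helper: encode the value for an HTML text context
// instead of trying to recognize dangerous tags.
function render_title(string $state): string
{
    $encoded = htmlspecialchars($state, ENT_QUOTES | ENT_SUBSTITUTE, 'UTF-8');
    return '<TITLE>' . $encoded . '</TITLE>';
}

// The bypass payload from the exploit URL, after URL decoding.
$payload = 'abc</title id="a"><img src=x onerror=alert(9)>';

echo render_title($payload), PHP_EOL;
// <TITLE>abc&lt;/title id=&quot;a&quot;&gt;&lt;img src=x onerror=alert(9)&gt;</TITLE>
```

With encoding there is no list of bad tags to keep current: every `<` in the value is turned into text, whatever follows it.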

• • •